1. Examples of Concurrency¶

Example 1: Operating Systems¶

Historically, operating systems supported multiprocessing with a single processor:

- The processor switches among programs (processes) after a short time slice.

- When one program is blocked on I/O, the processor also switches to another program.

This provides the illusion that programs run in parallel. Programs may not directly interact, but need to sychronize access to common resources like a file storage, printer, or network. Thus concurrency and synchronization became part of books on operating systems and are still!

Book from 1983:

- Introduction

- Operating System Services

- File Systems

- CPU Scheduling

- Memory Management

- Virtual Memory

- Disk and Drum Scheduling

- Deadlocks

- Concurrent Processes

- Concurrent Programming

- Protections

- Design Principles

- Distributed Systems

- Historical Perspective

Example 2: Interactive Programs¶

From Android Guides:

The main thread ... is in charge of dispatching events and rendering the user interface and is usually called the UI thread. All components (activities, services, etc) ... run in the same process and are instantiated by default in the UI thread.

... performing long operations such as network access or database queries in the UI thread will block the entire app UI from responding. When the UI thread is blocked, no events can be dispatched, including drawing events. From the user's perspective, the application will appear to freeze.

Additionally, ... the Android UI toolkit is not thread-safe and as such you must not manipulate your UI from a background thread ... two rules:

- Do not run long tasks on the main thread (to avoid blocking the UI)

- Do not change the UI at all from a background thread (only the main thread)

Programs under Windows have a similar structure.

Example 3: Requirements Analysis¶

The Business Process Modelling Notation (BPMN) serves for describing the interactions between agents in business processss. BPMN diagrams are similar to flowcharts, but they are not meant to be executed, they only serve for documenting the setting for which software is to be developed. (BPMN elements are also supported in draw.io and Vizio.)

Other requirements analysis techniques are textual. Being able to express concurrency is essential as the surrounding world is concurrent.

Example 4: Parallel Computing¶

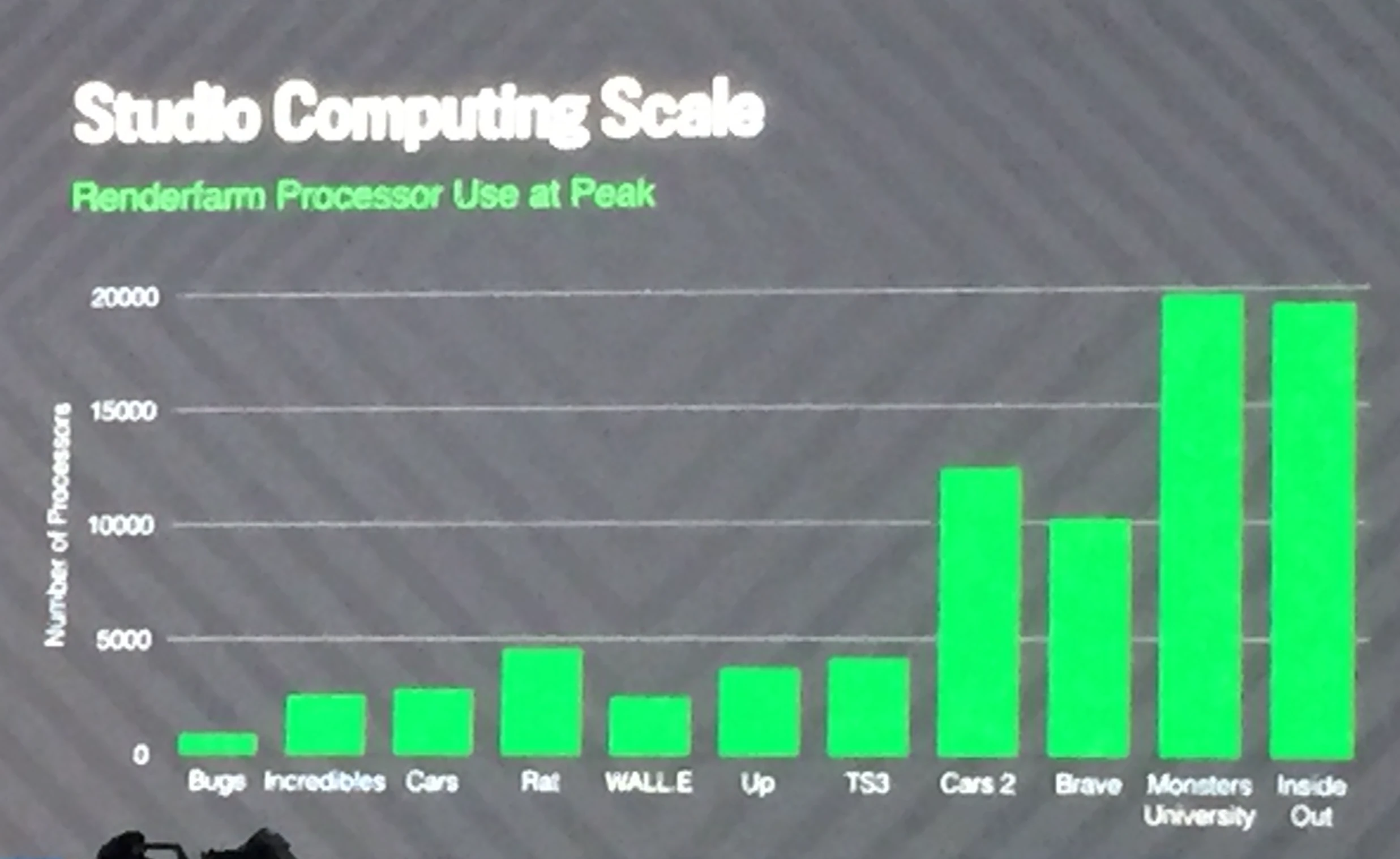

As light rays do not influence each other, all pixels of a frame and all frames of an animation can be computed in parallel. With 4K resolution (3840 × 2160 pixels) and 24 frames per second, a 110 min animation needs 1.3 × 10¹² pixels computed. For all rays needed for one pixel, the intersection of the ray with any object needs to be determined.

How Pixar brings its animated movies to life, Fortune, Sept 2015:

It took around 3,000 processors to render the movies The Incredibles and Cars, two films from the mid 2000s. For more recent films like Monsters University and Inside Out, that number has soared to around 20,000 processors.

All that extra processing power is noticeable when you study the hairs of the animated creature, Sulley, ... . When the original Monsters movie first appeared in 2001, Sulley had 1.1 million hairs covering his body. By Monsters University, released in 2013, Sulley had 5.5 million individual hairs.

Example 5: Software Design¶

Object-oriented design allows that the structure of program, the class hierarchy, reflects the structure of the problem domain: a description of the problem becomes part of the solution.

Simula-67, the first object-oriented languages, supported coroutines that allowed objects to be concurrent, particularly for simulations. Coroutines are scheduled cooperatively, meaning that transfer for control is explicit, unlike preemptively scheduled threads. (Coroutines are called fibers in Windows and are related to goroutines in Go; subsequent object-oriented languages, notably Smalltalk-80 and later C++, did not follow Simula in that respect.)

The overview to the right is for a simulation program for a post office: in principle, customers and clerks are all concurrent.

Embedded systems have to react to environmental events. As these can be independent, a natural structure is by having concurrent processes reacting to different kinds of events. Below is a statechart for an alarm (statecharts are related to UML state machines). For example, light and alarm status are independent and expressed as concurrent states, visually seperated by dashed lines.

Example 6: Server Architecture¶

An early server architecture is the three-tier architecture: presentation, application, and database servers are all concurrent. They may run on the same computer or on different computers.

(The architecture does not scale well beyond a certain size; data centers for cloud computing connect servers differently, with a "fat tree".)

Example 7: Protocols¶

By Rob Blanco - Own work, CC BY-SA 2.5 es

Bluetooth is a protocol for personal area networks (PAN) with a mesh topology: devices form piconets; devices have different roles, which changes as devices join and leave the network. All devices are concurrent and need to manage communication, including forwarding of messages to the right recipient.

Common themes¶

- Competition for shared resources, e.g. database, counter

- Communication between processes, e.g. between network devices

- Synchronization of processes, e.g. between OS services

- Fairness among processes, e.g. client requests

- Hierarchy of processes, e.g. in UI's

2. Why is concurrent programming hard?¶

Processes execute in a sequence of steps. Concurrent execution leads to interleaving of steps. For example, the parallel (concurrent) composition A ‖ B of processes A and B may result in:

| A | ➀ ➁ ➂ ➃ ➄ ➅ |

| B | ➀ ➁ ➂ ➃ ➄ ➅ |

| A ‖ B | ➀ ➀ ➁ ➂ ➁ ➃ ➂ ➃ ➄ ➄ ➅ ➅ |

Interleaving implies nondeterminism: different executions may lead to different interleavings. Interleavings may cause data races.

sell1: sell2:

r := sold ; s := sold ;

r := r + 1 ; s := s + 2 ;

sold := r sold := s

Question. Which amount does sell1 ‖ sell2 add to sold when run concurrently?

Answer. Either 1, 2, or 3.

Locking a variable (or any resource) gives exclusive access to that variable:

P: Q:

lock x and y ; lock x and y ;

x := x + 1 ; x := x + 2 ;

y := y - 1 ; y := y + 2 ;

unlock x and y unlock x and y

Variables x and y could be two bank accounts or the number of sold and available concert tickets.

Question. What could happen in P ‖ Q if P locks x, y and Q locks y, x in that order?

Answer. If P locks x and then Q locks y, a deadlock occurs: neither can proceed.

- Programs with data races or incorrect synchronization may compute wrong results, deadlock, livelock (infinite loop), or abort.

- Because of inherent nondeterminism, concurrent programs cannot be tested effectively.

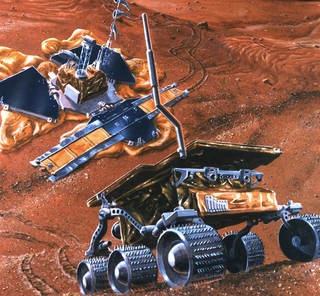

Example 1: NASA Mars Pathfinder¶

In July 1997, Pathfinder landed on Mars.

After a while Pathfinder stopped sending data and reset itself continuously.

After 18 hours the failure was reproduced in a lab replica: priority inversion, a form of starvation.

The system had a "watch dog" that discovered the situation and did a reset, and a reset, and a reset, …

The engineers managed to transmit code to Mars and execute it, to update the software. Testing during development did not reveal the error.

Example 2: Device Driver¶

Device drivers typically run in the operating system kernel and interface to mice, keyboards, drives, and other devices. As they run in the kernel, a faulty device driver can cause the whole operating system to crash.

- Around 2000, Windows shipped with 500 device drivers, most of them provided by device vendors. Reportedly, 80% of Windows crashes were traced back to faulty device drivers; concurrency errors (incorrect locking and releasing of resources etc.) were the most frequent.

- In the Linux 2.4.1 distribution, device drivers have 7 times more errors than the rest of the operating system. Among those, concurrency errors are the most frequent.

Example 3: Northeast American Power Blackout, 14 August 2003¶

World's second most widespread blackout in history:

- 12:15 p.m. Incorrect power flow telemetry in Ohio detected, but not properly corrected.

- 1:31 p.m. Eastlake, Ohio generating plant shuts down.

- 2:02 p.m. First 345 kV line in Ohio fails due to contact with a tree.

- 2:14 p.m. An alarm system fails at FirstEnergy's control room.

- 2:27 p.m. A second 345 kV line fails due to a tree.

- …

- 4:10 p.m. Ontario separates from the western New York grid.

- 4:11 p.m. The Keith-Waterman, Bunce Creek-Scott 230 kV lines and the St. Clair–Lambton #1 230 kV line and #2 345 kV line between Michigan and Ontario fail.

- 4:12 p.m. Windsor, Ontario, and surrounding areas drop off the grid.

- 4:12 p.m. Northern New Jersey separates its power-grids from New York and the Philadelphia area, causing a cascade of failing secondary generator plants along the New Jersey coast and throughout the inland regions west.

- 4:13 p.m. End of cascading failure. 256 power plants are off-line, 85% of which went offline after the grid separations occurred, most due to the action of automatic protective controls.

10 million people in Ontario and 45 million people in eight U.S. states without power

Task Force Report:

… a software bug in General Electric Energy's Unix-based XA/21 energy management system that prevented alarms from showing on their control system. This alarm system stalled because of a race condition. After the alarm system failed silently without being noticed by the operators, unprocessed events (that had to be checked for an alarm) started to queue up and the primary server failed within 30 minutes.

The event contributed to at least 11 deaths and cost an estimated $6 billion.

Example 4: Therac-25¶

Nancy Leveson and Clark Turner, An Investigation of the Therac-25 Accidents, 1993:

Some of the most widely cited software-related accidents in safety-critical systems involved a computerized radiation therapy machine called the Therac-25. Between June 1985 and January 1987, six known accidents involved massive overdoses by the Therac-25 - with resultant deaths and serious injuries. They have been described as the worst series of radiation accidents in the 35-year history of medical accelerators.

...

It is clear from the AECL [Atomic Energy of Canada Limited] documentation on the modifications that the software allows concurrent access to shared memory, that there is no real synchronization aside from data stored in shared variables, and that the "test" and "set" for such variables are not indivisible operations. Race conditions resulting from this implementation of multitasking played an important part in the accidents.

3. Why is Concurrent Programming Getting More Prevalent?¶

1. Increase in Internet Traffic (if it needs to be said)¶

Cisco Global Mobile Data Traffic Forecast, February 2019:

Global mobile data traffic grew 63 percent in 2016. The compound annual growth rate (CAGR) is predicted to be 46 percent from 2017 to 2022. (1 Exabyte = 10¹⁸ bytes.)

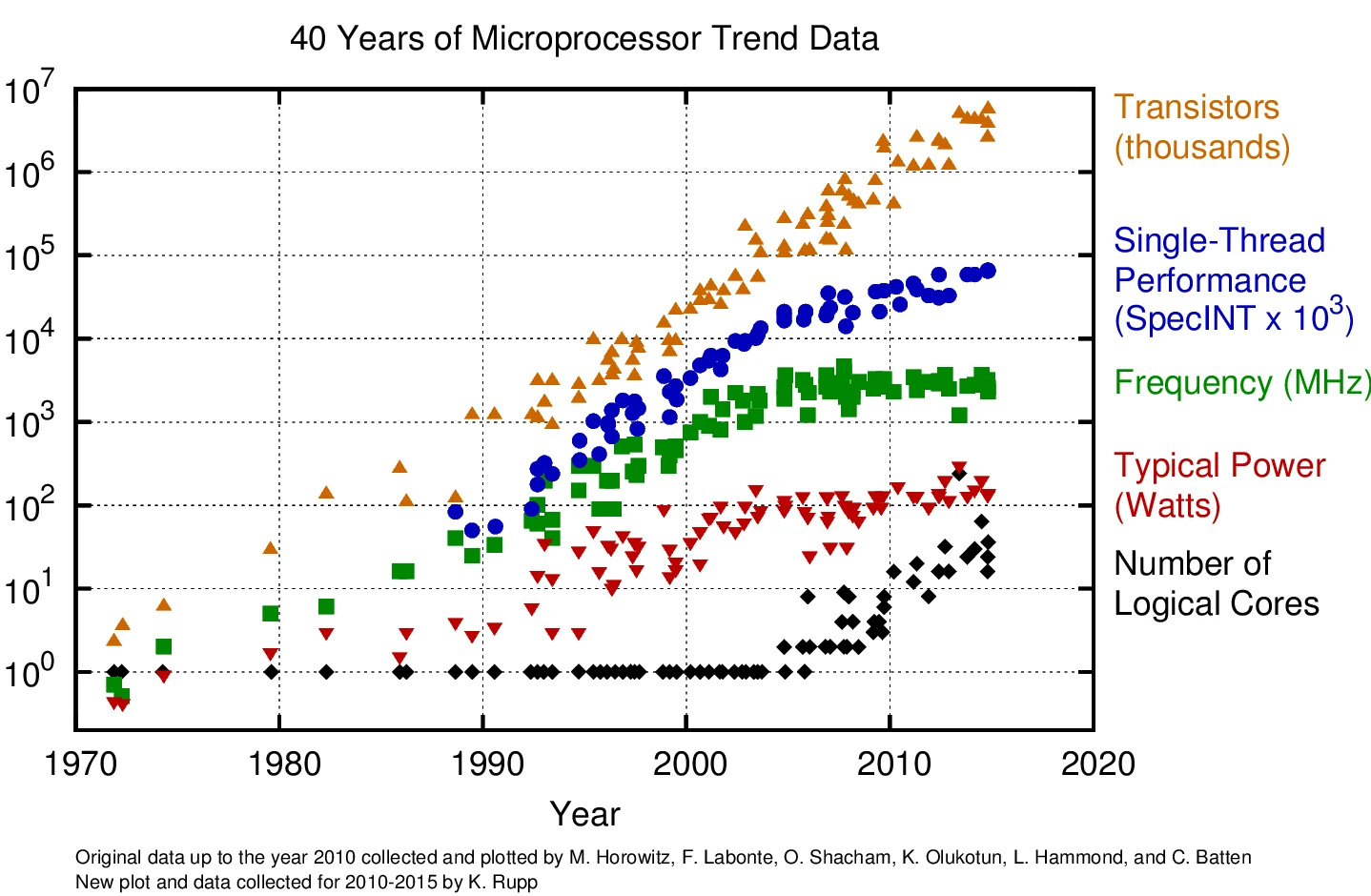

2. Increase in number of processor cores¶

Processor frequency is no longer increasing due to the power wall. For CMOS circuits:

Power = Capacitive load × Voltage² × Frequency

Single-thread performance is no longer increasing: the benefits of caching, pipelining, etc. are maxed out.

Number of transitors per processor is still increasing–linearly on a logarithmic scale, i.e. exponentially, doubling every 18 months as predicted by Gordon Moore.

This allow for more cores.

Further reading: Peter Denning and Ted Lewis, Exponential Laws of Computing Growth, 2017.

4. What can we do about it?¶

Libraries¶

Libraries hide some of the complexity for specific applications, e.g.

- efficient implementation of data structures: Java

- parallel computing: MPI (Message Passing Interface), OpenMP

- distributed computing: Akka for reliability and load balancing

These are useful in practice, but limited to the intended applications.

Verification tools¶

Microsoft's VCC is one such tool:

VCC is a mechanical verifier for concurrent C programs. VCC takes a C program, annotated with function specifications, data invariants, loop invariants, and ghost code, and tries to prove these annotations correct. If it succeeds, VCC promises that your program actually meets its specifications.

It's main use is for Microsoft's Hyper-V hypervisor. Try out VCC online at: http://rise4fun.com/vcc

Similar tools for Java and other languages exist. They are mainly used for highly-critical software, despite the fact that these tools dramatically reduce the time for testing and can even reduce development and maintenance effort.

Static analysis tools¶

Unlike verification tools, static analysis tools find errors without needing annotation. However, they can't find all errors (incomplete) and may produce false warnings (unsound).

For detecting concurrency errors in Windows:

- Device drivers have to pass the Static Driver Verifier (called so because there is also a dynamic verifier that detects driver errors at run-time), a static analysis tools that emerged from the SLAM research project.

- Visual Studio for C/C++ includes a tool for Code Analysis, which report numerous errors: the concurrency errors start at C26100.

For detecting concurrency errors in Java:

- NASA Java PathFinder: free, large, extensive checking

- ThreadSafe: newer, commercial, specifically for concurrency errors

- FindBugs: open source, several categories of errors: results from some applications

- IBM Concurrency Benchmark: a set of programs with concurrency bugs, to evaluate tools

Despite their drawbacks, static analysis tools have become popular in practice, e.g. https://scan.coverity.com/ as they still reduce testing time significantly, don't require training, and fit in existing development processes.

Dynamic analysis tools¶

ThreadSanitizer is one such tool, developed by Google, and included in clang:

ThreadSanitizer is a tool that detects data races. It consists of a compiler instrumentation module and a run-time library.

From a Google report "How Developers Use Data Race Detection Tools", 2014:

[ThreadSanitizer] regularly finds critical bugs, and is in wide use across Google ... . One interesting incident occurred in the open source Chrome browser. Up to 15% of known crashes were attributed to just one bug ..., which proved difficult to understand–the Chrome engineers spent over 6 months tracking this bug without success. On the other hand, the [ThreadSanitizer] team found the reason for this bug in a 30 minute run, without even knowing about these crashes. The crashes were caused by data races on a couple of reference counters.

ThreadSanitizer is included in Xcode. From Apple's developer documentation:

Running your code with Thread Sanitizer checks enabled can result in CPU slowdown of 2⨉ to 20⨉, and an increase in memory usage by 5⨉ to 10⨉.

New programming languages¶

- Go: message passing over sychronous and asynchronous channels, static analysis for race detection of shared variables.

Developed by Google; used by Netflix, many others - Erlang: functional concurrent language, actors with message passing over asynchronous channels.

Developed by Ericsson; used for WhatsApp (also here), FaceBook Chat, Amazon SimpleDB, Cisco Network Configuration - Scala: functional object-oriented language, futures for background computation, actors, data-parallel operations on collections.

Developed by EPFL; used for Apache Spark, Twitter, Duolingo - Clojure: functional concurrent language, message passing over synchronous and asynchronous channels, transactions for shared state.

Used at Walmart - Rust: has a type system with ownership to make both message passing and shared variables safer.

Developed by Mozilla for FireFox; used by Coursera, Dropbox, Samsung, ...

Further reading: Edward Lee, The Problem with Threads, 2006.

5. Dimensions of concurrency¶

In multiprogramming several concurrent processes may be executed by multiplexing processors. In multiprocessing several processors are sharing memory. In distributed processing there are several processors without shared memory.

Granularity of atomic operations can reach from nanoseconds (for arithmetic operations) to days. For very fine-grained concurrency the overhead of starting processes outweighs the benefit, for example evaluating parameters of the calls p(x + y, x - y) in parallel.

Coupling can be loose or tight. For very tightly coupled programs the overhead of communication and synchronization outweighs the benefit: for example, sorting an array with one process for each array element.

Coupling can be between processes can be independent, regular, or general.

- Independent concurrency: For arrays

a,b,c, the vector addition

algorithm

c := a + b

- Regular concurrency: For array

arepacing each element with the average of its neighbours

algorithm

a[i] := (a[i - 1] + a[i + 1]) / 2

Some compilers, notably Fortran compilers can recognize independent or regular concurrency and automatically generate code for vector processors that perform the same operation on a number of elements, leading to data parallelism.

The main concern for independent and regular concurrency is performance, for general concurrency it is correctness.

5. What this course is about¶

The practice of concurrency is changing quickly. The emphasis is on

- general concurrency (independent and regular concurrency in courses on parallel and distributed programming),

- the fundamentals of concurrency and seeing how they apply to current languages, and

- correctness of concurrent programs.

This notes use algorithmic notation for brevity and clarity in addition to examples in Python, Java, and Go. For example, setting x to 1 and in parallel y to 2 is expressed as:

algorithm

x := 1 ‖ y := 2

The assignment x := e, also written as x ← e, is read x becomes e or x gets e.

To illustrate the verbosity of programming languages, here is the same in Python, with a print statement added. For both assignments, classes need to be declared, objects created, threads started, and awaited for termination. (Select the cell and run the program with control-return).

from threading import Thread

class SetX(Thread):

def run(self):

global x

x = 1

class SetY(Thread):

def run(self):

global y

y = 2

setX = SetX()

setY = SetY() # create new threads

setX.start()

setY.start() # run threads

setX.join()

setY.join() # wait for threads to finish

print(x, y)

In Java, also classes need to be declared; additionally, exceptions need to be caught (select the cell and save the file with control-return; select the next cell and run the shell commands with control-return).

%%writefile SetXY.java

public class SetXY {

static int x, y;

public static void main(String args[]) {

class SetX extends Thread {

public void run() {

x = 1;

}

}

class SetY extends Thread {

public void run() {

y = 2;

}

}

Thread setX = new SetX(), setY = new SetY();

setX.start(); setY.start();

try {setX.join(); setY.join();}

catch (Exception e) {};

System.out.println(x + ", " + y);

}

}

!javac SetXY.java

!java SetXY

In Go, for each assignment a function needs to be declared, which below is anonymous. For awaiting the termination of both functions, a channel is introduced to which both functions send a dummy value; the main program waits for these values:

%%writefile SetXY.go

package main

import "fmt"

func main() {

var x, y int

done := make(chan bool)

go func() {x = 1; done <- true} ()

go func() {y = 2; done <- true} ()

<- done; <- done

fmt.Println(x, y)

}

Overwriting SetXY.go

!go run SetXY.go

1 2

%%writefile SetXY.c

#include <pthread.h>

#include <stdio.h>

int x,y;

void *SetX(void *args) {

x = 1; return NULL;

}

void *SetY(void *args) {

y = 1; return NULL;

}

int main(int argc, char *argv[]) {

pthread_t setX, setY;

pthread_create(&setX, NULL, SetX, NULL);

pthread_create(&setY, NULL, SetY, NULL);

pthread_join(setX, NULL);

pthread_join(setY, NULL);

printf("%d %d\n", x,y);

}

Overwriting SetXY.c

!gcc SetXY.c -lpthread -o SetXY

!./SetXY

1 1

We cover the main concurrency concepts:

- Nature of concurrency

- Mutual exclusion and condition sychronization

- Atomicity

- Safety, liveness, termination, deadlock, livelock, fairness

- Computer architecture and memory models

- Processes vs threads

- Critical sections

- Barrier synchronization

- Producers and consumers

- Readers and writers

- Bounded buffers

- Semaphores

- Monitors

- Message passing over synchronous and asynchronous channels

- Remote procedure call and rendezvous

The notes start with a review of program correctness.

Python and Java are used for semaphores and monitors, Go is used for message passing.

7. Recommended Reading¶

Concurrency¶

- Allen Downey, The Little Book of Semaphores, 2016: free book with a trove of examples

- Gregory Andrews, Foundations of Multithreaded, Parallel, and Distributed Programming, 2000: close to this course; get a used copy online

- Remzi Arpaci-Dusseau and Andrea Arpaci-Dusseau, Operating Systems: Three Easy Pieces, 2015: concise, free book; has one piece on concurrency with one chapter on concurrency bugs; uses C with Pthread

- Jeff Magee and Jeff Kramer, Concurrency: State Models & Java Programs, 2nd Edition, 2006: used in previous editions of this course; uses message passing from the beginning; here message passing is covered later

- Mordechai Ben-Ari, Principles of Concurrent and Distributed Programming, 2nd Edition, 2006: also close to this course, but goes into temporal logic and automated "model checking", which we don't

Python¶

- John Guttag, Introduction to Computation and Programming Using Python, 2nd Edition, 2016: focuses on methodological aspects rather than on the language; complements http://python.org

Java¶

- John Guttag and Barbara Liskov, Program Development in Java: Abstraction, Specification, and Object-Oriented Design, 2001: also focuses on methodological aspects rather than on the language

Go¶

- Alan Donovan and Brian Kernighan, The Go Programming Language, 2015: authoritative book on Go

- https://golang.org/doc/: official Go documentation

- https://github.com/golang/go/wiki/Learn: further online learning tools

8. How to fail (or do poorly) in this course¶

- Not attending classes (and not submitting M&Ms): there is a strong correlation between the number of submitted M&Ms and the grade in previous years. Even worse is faking M&Ms; we catch those.

- Not going to the tutorials for solutions of assignments or to ask questions: solutions are only discussed in tutorials; the TAs can't answer last minute questions by e-mail; proper explanations are too involved for communication by e-mail.

- Using another IDE and pasting the code into Jupyter notebooks: you can't get explanations on how to use Jupyter during tests; besides, you miss the points of "literate programming" and "reproducible research".

- Not completing the first two assignments and thinking to catch up: the grading scheme is meant to allow you to make errors and still do well in the course, not to skip work.

- Thinking that if your program produces the correct result it is correct: you may have been just lucky with nondeterminism. If it is not correct under all possible interleavings, it is incorrect and you get zero.

- Waiting until the night before the midterm test or final exam to study the material: much of the material is like mathematics; you can't understand mathematics overnight.