See also: Transformers. Notes from (Elhage et al., 2021) and (Olsson et al., 2022).

virtual weights

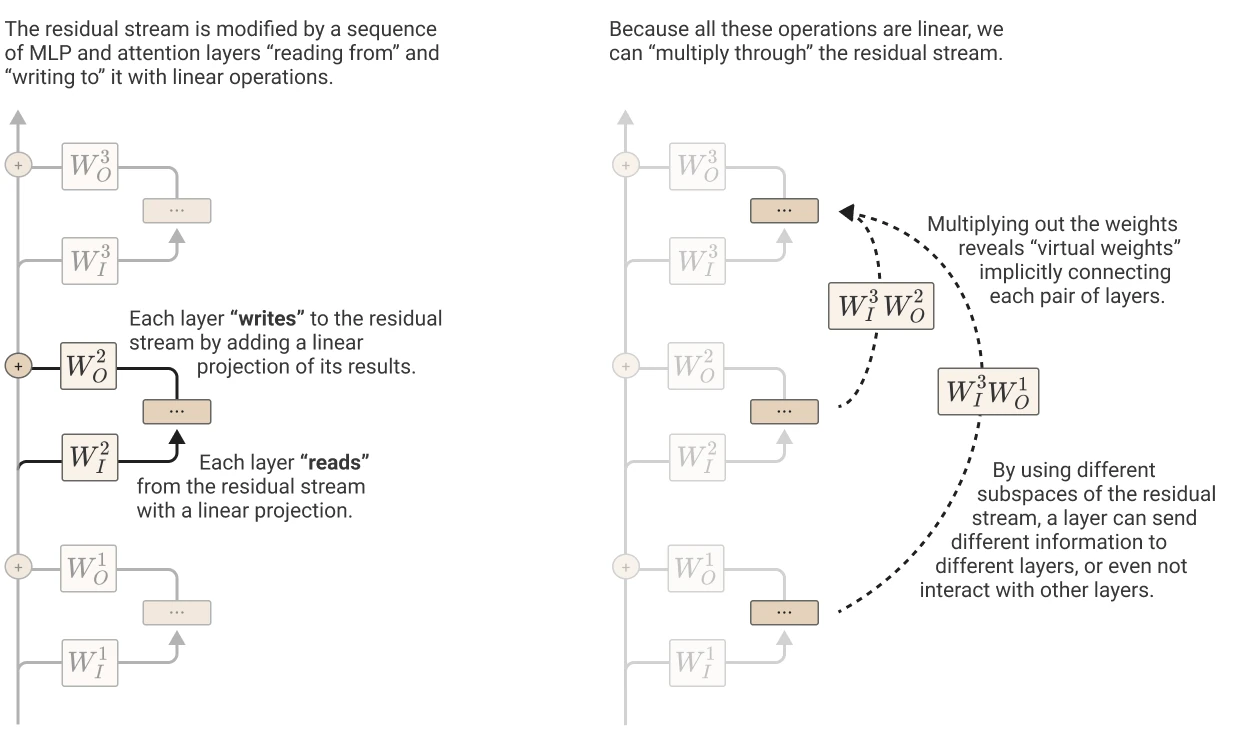

Each layer performs an arbitrary linear transformation to "read in" information from the residual stream, and another arbitrary linear transformation to "write out" its result back to the residual stream.
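A minimal sketch of this read/write pattern, assuming NumPy, toy sizes, and a hypothetical `layer` function whose internal computation is just an elementwise `tanh` (real attention and MLP layers do more between the two linear maps):

```python
import numpy as np

# Toy sizes, chosen only for illustration.
d_model, d_hidden = 8, 4
rng = np.random.default_rng(0)

def layer(x, W_in, W_out, f=np.tanh):
    """Hypothetical residual block: read in with W_in, apply f, write out with W_out."""
    h = f(W_in @ x)        # "read in": linear map from the residual stream
    return x + W_out @ h   # "write out": linear map added back into the stream

W_in = rng.normal(size=(d_hidden, d_model))
W_out = rng.normal(size=(d_model, d_hidden))
x0 = rng.normal(size=d_model)   # residual stream before the layer
x1 = layer(x0, W_in, W_out)     # residual stream after the layer
```

Whatever happens inside the layer, its only effect on the stream is the additive term `W_out @ h`.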

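In a sense, the residual stream doesn't have a privileged basis, since every layer only reads from and writes to it through arbitrary linear maps.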

One salient property of the linear residual stream is that we can think of each pair of layers as being connected by implicit "virtual weights"¹. These virtual weights are the product of the output weights of one layer with the input weights of another (i.e. $W_I^2 W_O^1$), and describe the extent to which a later layer reads in the information written by a previous layer.
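A small numerical check of the virtual-weight idea, assuming NumPy and random toy matrices: `W_O1` stands for layer 1's output weights $W_O^1$ and `W_I2` for layer 2's input weights $W_I^2$ (names and sizes are illustrative). Because reading from the stream is linear, the part of layer 2's input that comes from layer 1 is exactly $W_I^2 W_O^1$ applied to layer 1's hidden activations:

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_h1, d_h2 = 8, 4, 3   # toy sizes; hidden widths may differ per layer

W_O1 = rng.normal(size=(d_model, d_h1))  # layer 1 output weights (writes to stream)
W_I2 = rng.normal(size=(d_h2, d_model))  # layer 2 input weights (reads from stream)

# Virtual weights: a direct map from layer 1's hidden space to layer 2's input.
W_virtual = W_I2 @ W_O1                  # shape (d_h2, d_h1)

x0 = rng.normal(size=d_model)            # residual stream before layer 1
h1 = rng.normal(size=d_h1)               # layer 1 hidden activations (arbitrary here)
x1 = x0 + W_O1 @ h1                      # layer 1 writes into the stream

# By linearity, layer 2's input splits into a part from x0 and a part from layer 1.
layer2_input = W_I2 @ x1
from_layer1 = layer2_input - W_I2 @ x0   # equals W_virtual @ h1
```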

privileged basis

tldr: we can rotate the residual stream, by rotating all the matrices interacting with it, without modifying the model's behaviour.
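A minimal sketch of the rotation argument, assuming NumPy and a toy residual model (hypothetical `forward`, embedding `W_E`, unembedding `W_U`, per-layer `W_in`/`W_out`, and no LayerNorm). A random orthogonal $Q$ is applied to every matrix that writes to the stream ($Q W$) and every matrix that reads from it ($W Q^\top$); the logits come out the same:

```python
import numpy as np

rng = np.random.default_rng(0)
vocab, d_model, d_hidden, n_layers = 5, 8, 4, 2   # toy sizes

def forward(token, W_E, layers, W_U):
    """Toy residual model: embed, apply residual layers, unembed (no LayerNorm)."""
    x = W_E[:, token]                       # embedding column for this token
    for W_in, W_out in layers:
        x = x + W_out @ np.tanh(W_in @ x)   # read in, nonlinearity, write out
    return W_U @ x                          # logits

W_E = rng.normal(size=(d_model, vocab))
W_U = rng.normal(size=(vocab, d_model))
layers = [(rng.normal(size=(d_hidden, d_model)), rng.normal(size=(d_model, d_hidden)))
          for _ in range(n_layers)]

# Random orthogonal matrix (Q.T @ Q = I) taken from a QR decomposition.
Q, _ = np.linalg.qr(rng.normal(size=(d_model, d_model)))

# Writers get Q @ W, readers get W @ Q.T, so the stream becomes Q @ x everywhere.
W_E_rot = Q @ W_E
W_U_rot = W_U @ Q.T
layers_rot = [(W_in @ Q.T, Q @ W_out) for W_in, W_out in layers]

logits = forward(3, W_E, layers, W_U)
logits_rot = forward(3, W_E_rot, layers_rot, W_U_rot)
```

The rotated weights look completely different coordinate by coordinate, yet the logits match up to floating-point error. The `tanh` acts on each layer's hidden activations, which are not rotated here, so this sketch only speaks to the residual stream itself.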

Bibliography

- Elhage, N., Nanda, N., Olsson, C., Henighan, T., Joseph, N., Mann, B., Askell, A., Bai, Y., Chen, A., Conerly, T., DasSarma, N., Drain, D., Ganguli, D., Hatfield-Dodds, Z., Hernandez, D., Jones, A., Kernion, J., Lovitt, L., Ndousse, K., … Olah, C. (2021). A Mathematical Framework for Transformer Circuits. Transformer Circuits Thread.

- Olsson, C., Elhage, N., Nanda, N., Joseph, N., DasSarma, N., Henighan, T., Mann, B., Askell, A., Bai, Y., Chen, A., Conerly, T., Drain, D., Ganguli, D., Hatfield-Dodds, Z., Hernandez, D., Johnston, S., Jones, A., Kernion, J., Lovitt, L., … Olah, C. (2022). In-context Learning and Induction Heads. Transformer Circuits Thread.

Remark

1. Note that for attention layers, there are three different kinds of input weights: $W_Q$, $W_K$, and $W_V$. For simplicity and generality, we think of layers as just having input and output weights here.