see also: github

TLDR: Most algorithm determine importance through aggregating attentions over observed queries (Liu et al., 2023; Zhang et al., 2023)

More recent work aggregated attention from limited observation windows (Cai et al., 2024; Li et al., 2024)

uses top_k to find -indices of attentions per head to preserve, and evict the not-so-important ones.

idea.

Look at past attention weights for each pair of key and value vectors (a measure of the degree with which that KV’s representation has been queried during past attention operations)

Then select the KV with the least attention to evict

Think of LFU (least frequency used) cache management policy

the KV cache for each sequence in a particular layer is allocated on the GPU as a # attention heads sequence length tensor.

Important

total memory allocation scales with the maximum sequence length for all attention heads of the KV cache

Adaptive KV-cache compression

See also paper![]() (Ge et al., 2024)

(Ge et al., 2024)

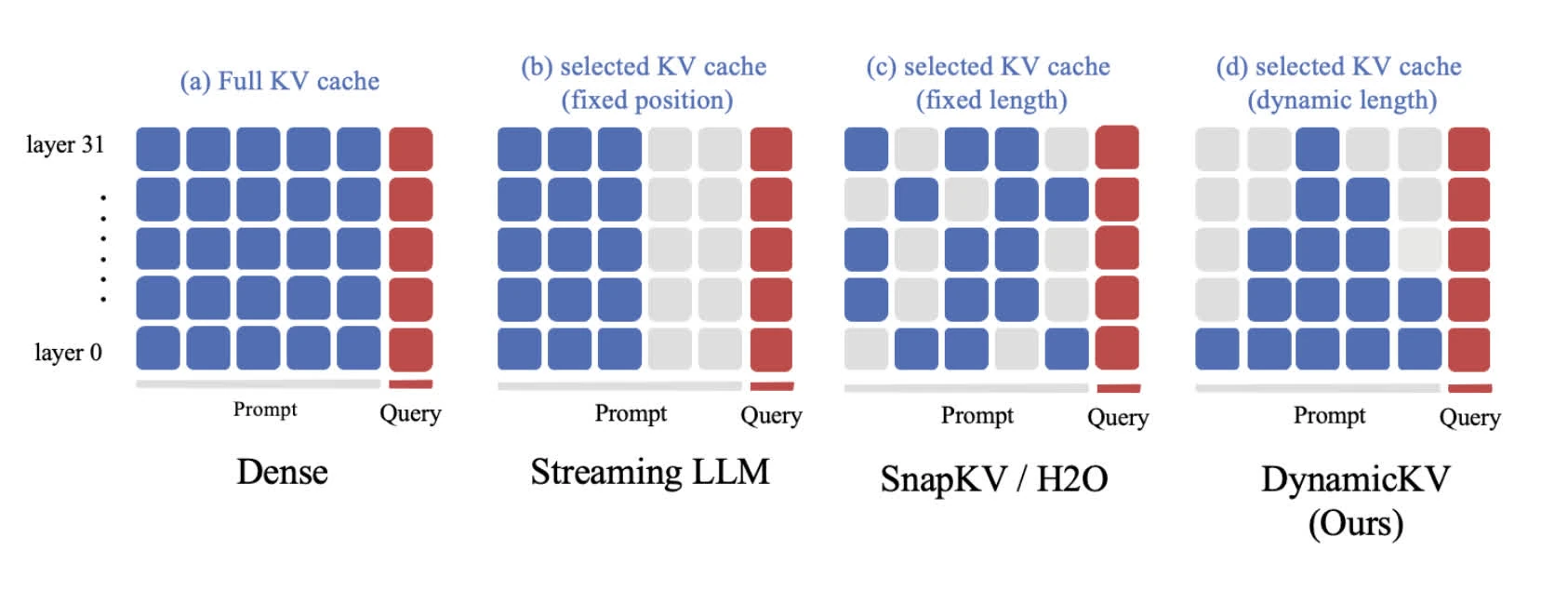

Streaming LLM

Using attention sink

see also paper![]() (Xiao et al., 2024)

(Xiao et al., 2024)

Ablate attentions among layers that deemed to be less valuable to current generations.

Pyramid-KV

See also paper![]() (Cai et al., 2024)

(Cai et al., 2024)

Snap-KV

See also paper![]() , github (Li et al., 2024)

, github (Li et al., 2024)

Voting: calculating attention weights for each query within observation windows across all attention heads, then aggregate to highlight prefix positions. Formally for a single batch:

hijack for llama_hijack_4_37.py

Important

is defined as , where is the compression rates.

Hit Rate: essentially the attention features above a predefined threshold to be important features.

The idea is to have two stages:

-

Vote for important features: select important features based on important features given fixed windows.

-

Update and store the compressed KV: concat attention features within the windows and update the KV-cache.

-

clustering via pooling ⇒ frequent hit-rate attention

attn_cache = pool1d(attn_weights_sum, kernel_size=kernel_size, padding=kernel_size//2, stride=1)

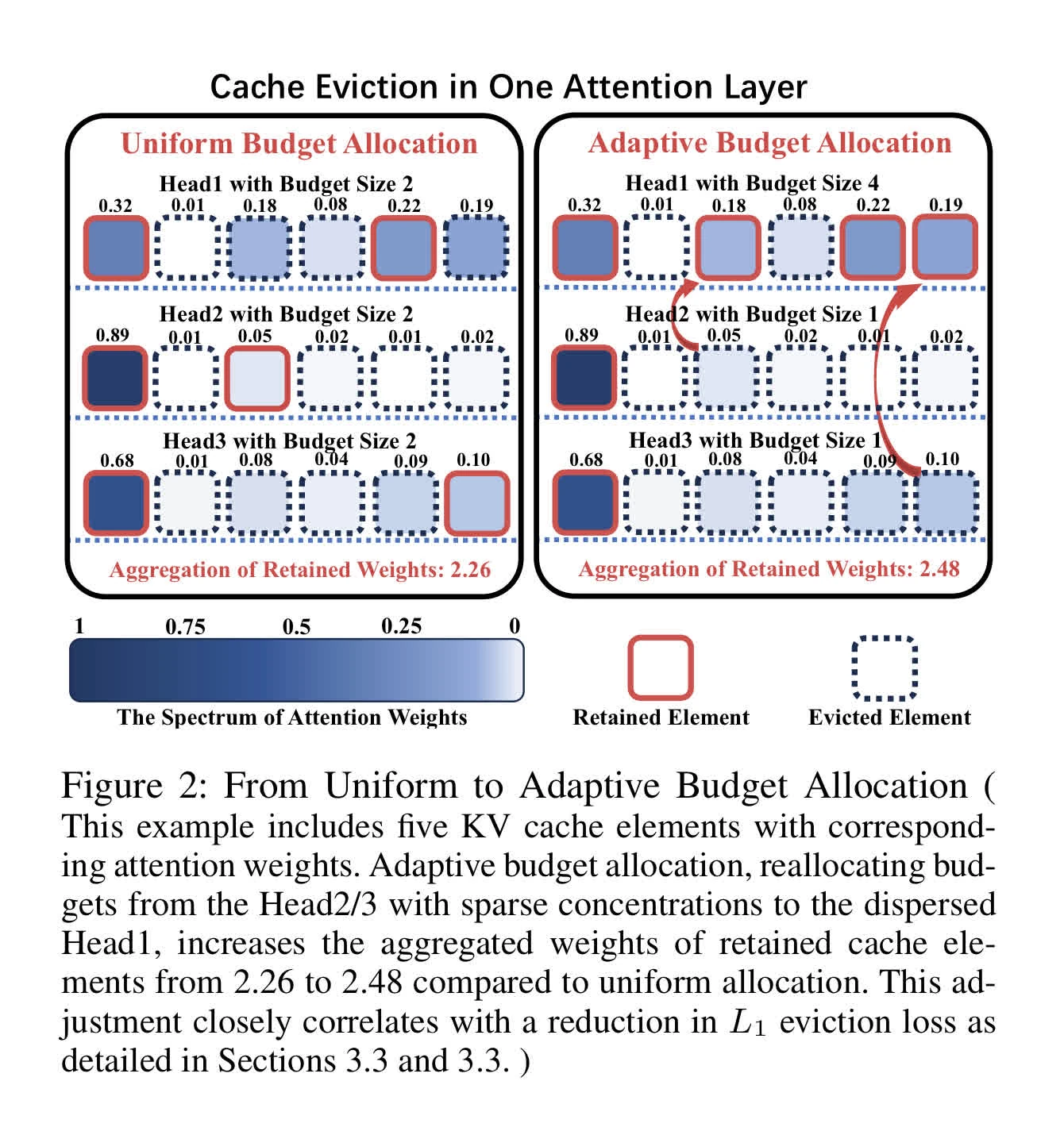

Ada-KV

ideas: instead of uniform eviction for KV cache hit, allocate a certain budget per attention heads to dynamically evict certain heads

built on-top of PyramidKV and SnapKV

Note

With Ada-SnapKV, each attention layers are still assigned with a fixed compression rate (refer to the image example)

See also paper![]() (Feng et al., 2024)

(Feng et al., 2024)

KIVI

link: github

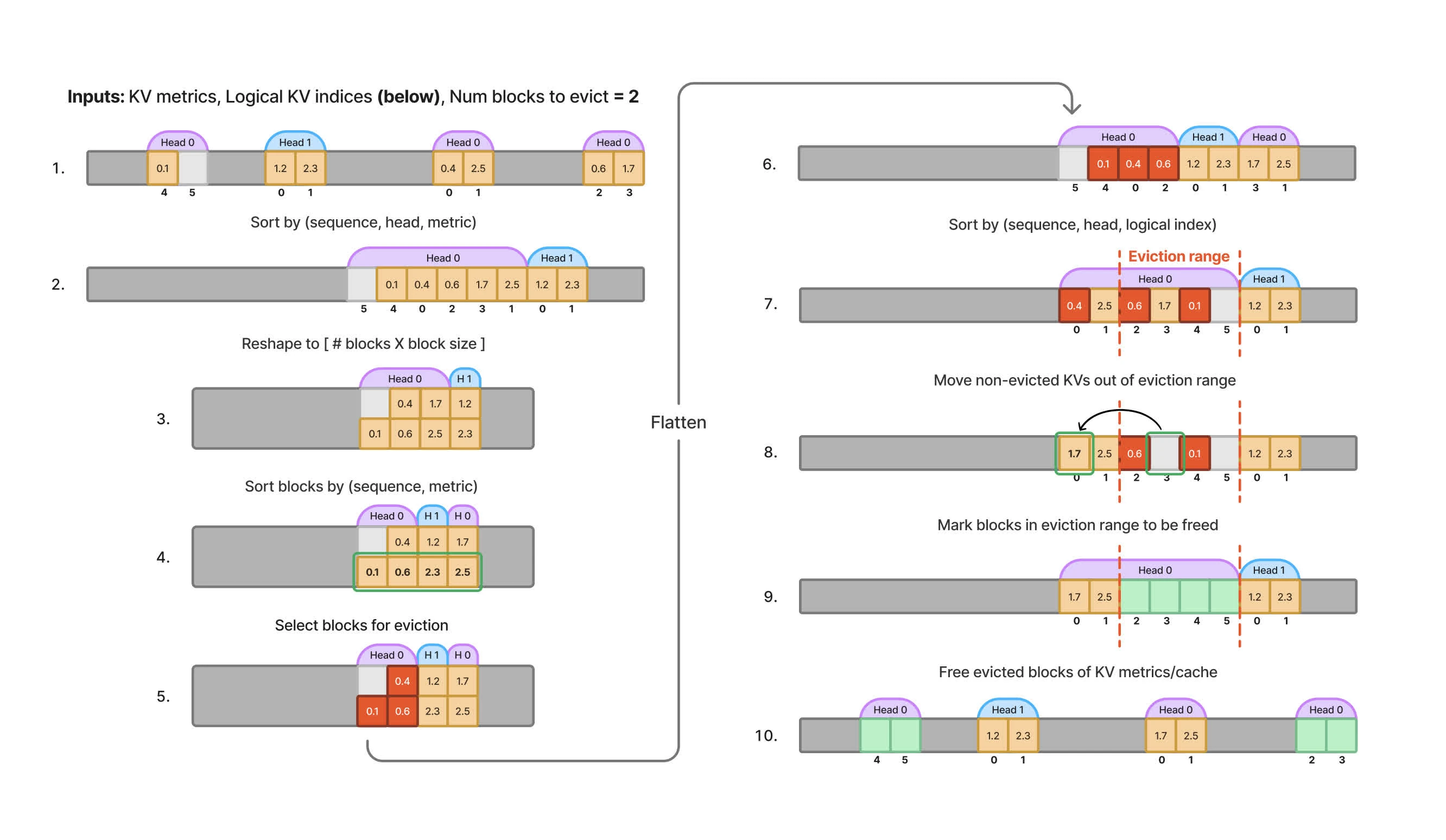

KV-Compress

variable compression rates per attention head

source: github

idea.

Look at past attention weights for each pair of key and value vectors (a measure of the degree with which that KV’s representation has been queried during past attention operations)

Then select the KV with the least attention to evict

Think of LFU (least frequency used) cache management policy

the KV cache for each sequence in a particular layer is allocated on the GPU as a # attention heads sequence length tensor.

Lien vers l'originalImportant

total memory allocation scales with the maximum sequence length for all attention heads of the KV cache

Notes

A variation of Ada-SnapKV

Motivation:

- group-query-compression: compress KV-cache of GQA without repeating it into the dimension of query heads.

- Modified

PagedAttentionthat compute against KV-cache (contains variable numbers of KVs per head)

For vLLM, each cache block stores KV for every attention head of every layer

For KV-Compress, each block only holds KVs for a single head. Block tables are expanded so that unique block for each specific KV head and layer can be retrieved

Query-Group Compression (QGC)

KV compression algorithm doesn’t have GQA design in mind.

- Pyramid-KV cache and compress KV after repetition for alignment with query tensors

- Redundancy in cache before compression

modification of eviction-based methods per groups

Block layout and allocation

idea: adapt PagedAttention to page out cache on a per-head, per-layer–as well as per sequence–basis

explanation

A simplified example with two KV heads and a block size of two:

- KV metrics are visualized for a given cache state, highlighting blocks of a particular sequence in the decoding batch that is scheduled to evict two blocks.

- Logical indices are displayed under the corresponding metrics slot.

Evict from Paged KV cache

Lien vers l'originalneed to evict KV blocks instead of evict single KV attention

Bibliographie

- Cai, Z., Zhang, Y., Gao, B., Liu, Y., Liu, T., Lu, K., Xiong, W., Dong, Y., Chang, B., Hu, J., & Xiao, W. (2024). PyramidKV: Dynamic KV Cache Compression based on Pyramidal Information Funneling. arXiv preprint arXiv:2406.02069 [arXiv]

- Feng, Y., Lv, J., Cao, Y., Xie, X., & Zhou, S. K. (2024). Ada-KV: Optimizing KV Cache Eviction by Adaptive Budget Allocation for Efficient LLM Inference. arXiv preprint arXiv:2407.11550 [arXiv]

- Ge, S., Zhang, Y., Liu, L., Zhang, M., Han, J., & Gao, J. (2024). Model Tells You What to Discard: Adaptive KV Cache Compression for LLMs. arXiv preprint arXiv:2310.01801 [arXiv]

- Li, Y., Huang, Y., Yang, B., Venkitesh, B., Locatelli, A., Ye, H., Cai, T., Lewis, P., & Chen, D. (2024). SnapKV: LLM Knows What You are Looking for Before Generation. arXiv preprint arXiv:2404.14469 [arXiv]

- Liu, Z., Desai, A., Liao, F., Wang, W., Xie, V., Xu, Z., Kyrillidis, A., & Shrivastava, A. (2023). Scissorhands: Exploiting the Persistence of Importance Hypothesis for LLM KV Cache Compression at Test Time. arXiv preprint arXiv:2305.17118 [arXiv]

- Xiao, G., Tian, Y., Chen, B., Han, S., & Lewis, M. (2024). Efficient Streaming Language Models with Attention Sinks. arXiv preprint arXiv:2309.17453 [arXiv]

- Zhang, Z., Sheng, Y., Zhou, T., Chen, T., Zheng, L., Cai, R., Song, Z., Tian, Y., Ré, C., Barrett, C., Wang, Z., & Chen, B. (2023). H₂O: Heavy-Hitter Oracle for Efficient Generative Inference of Large Language Models. arXiv preprint arXiv:2306.14048 [arXiv]