Attention operates on a sequence of query , key and value vector. Attention matrix of a sequence then computed as:

We can probably arrange the attention function (composed of multiple attention-heads) according to (Elhage et al., 2021):

where the learn-able weight matrices and , is the dimension per head, are combined OV matrix

Muti-head Attention

Allows the model to jointly attend to information from different representation subspaces at different positions:

Group-Query Attention

by (Ainslie et al., 2023)

idea: reduce number of KV heads to a fraction of number of query heads (evenly dividing the query heads into groups with heads)

RadixAttention

Implemented in (Zheng et al., 2024) where they maintain a LRU eviction policy to maintain relevant KV cache for all requests within a radix tree

radix tree setup:

- key: sequence of tokens

- value: KV cache tensor (stored in GPU in a paged layout)

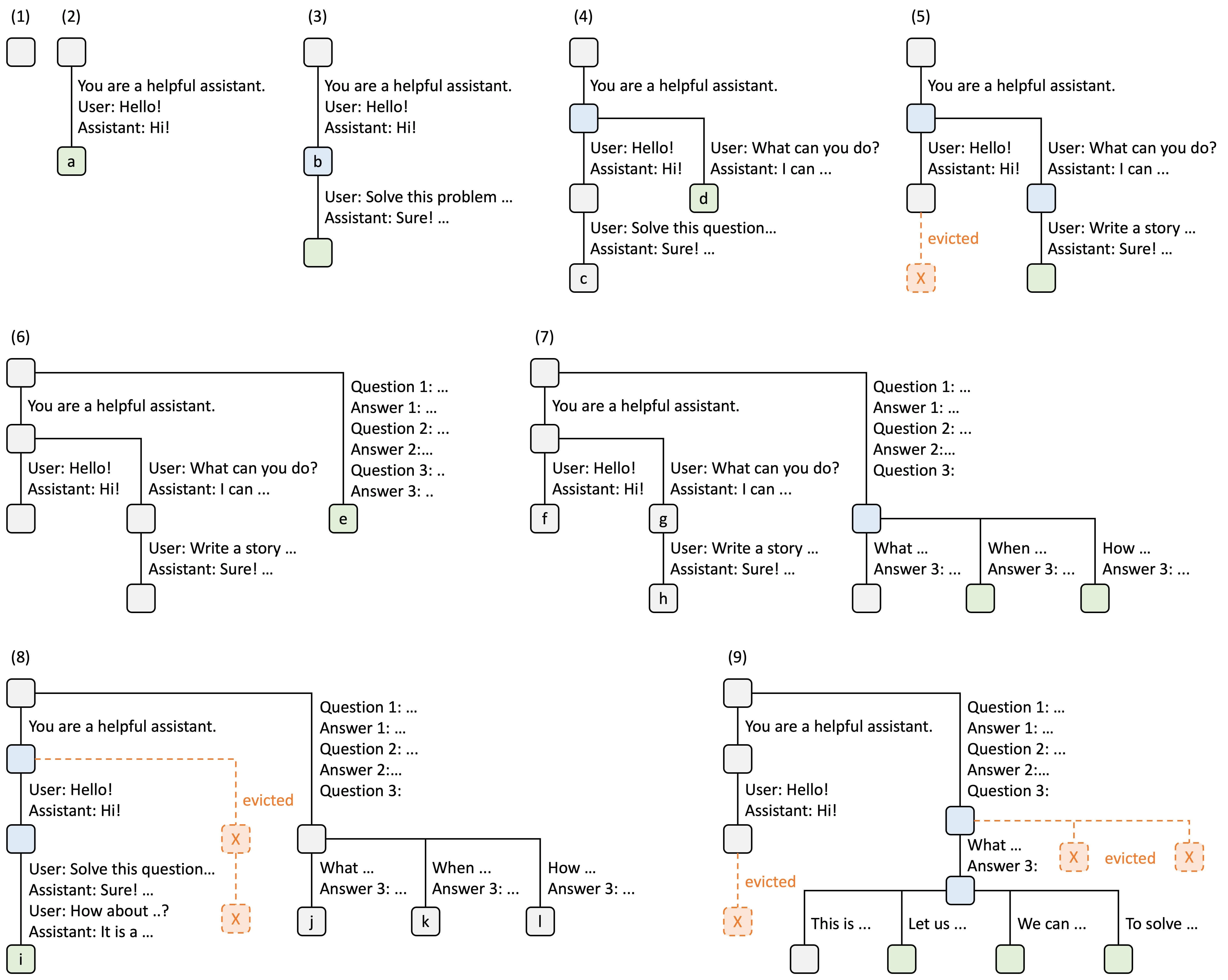

dynamic evolution of the radix tree in response to various requests.

explanation of RadixAttention with LRU eviction policy

These requests include two chat ses,sions, a batch of few-shot learning inquiries, and a self-consistency sampling. Each tree edge carries a label denoting a substring or a sequence of tokens. The nodes are color-coded to reflect different states: green for newly added nodes, blue for cached nodes accessed during the time point, and red for nodes that have been evicted.

cache-aware scheduling

We define the hit rate as

in batch settings: sort requests by matching prefix length and prioritise one with longer matched prefixes instead of FIFO schedule.

Algorithm 1 Cache-Aware Scheduling

Input: Radix tree , Memory pool .

Input: current running batch , waiting queue .

Output: Finished requests and updated system state.

// Get all requests from the waiting queue

requests

// Search for prefix matching for all waiting request

for req requests do

req.prefix_node, req.prefix_len T.match_prefix(req.input_tokens)

end for

// Sort the request according to matched prefix lengths

requests.sort()

// Select requests for the next batch

available_size T.evictable_size() + P.available_size()

current_size 0

new_batch []

for req requests do

if req.size() + current_size available_size then

new_batch.append(req)

available_size available_size +

end if

end for

Q.remove_requests(new_batch)

// Insert requests into the current running batch

B.merge(new_batch)

// Allocate new memory and do eviction if necessary

needed_size B.needed_size()

success, buffer P.alloc(needed_size)

if then

T.evict(needed_size)

success, buffer P.alloc(needed_size)

end if

B.run(buffer)

// Process finished requests

finished_requests B.drop_finished_requests()

for req finished_requests do

T.decrease_ref_counter(req.prefix_node)

T.insert(req)

end for

return finished_requests

We got lower bound:

Consider we visit radix tree in DFS order. For each edge of , the first time we compute KV cache associated with , then we will compute the whole subtree of .

During computation of subtree, then edge will be continuously hit, thus no additional computation will happen.

cache hit

with cache size maximum request length (which will equals to longest path in radix tree), edge WILL NOT be evicted during computation of its subtree since the common prefix including of the subtree will be continuously hit.

We can show that longest-shared-prefix-first order is equivalent to DFS order by induction 1

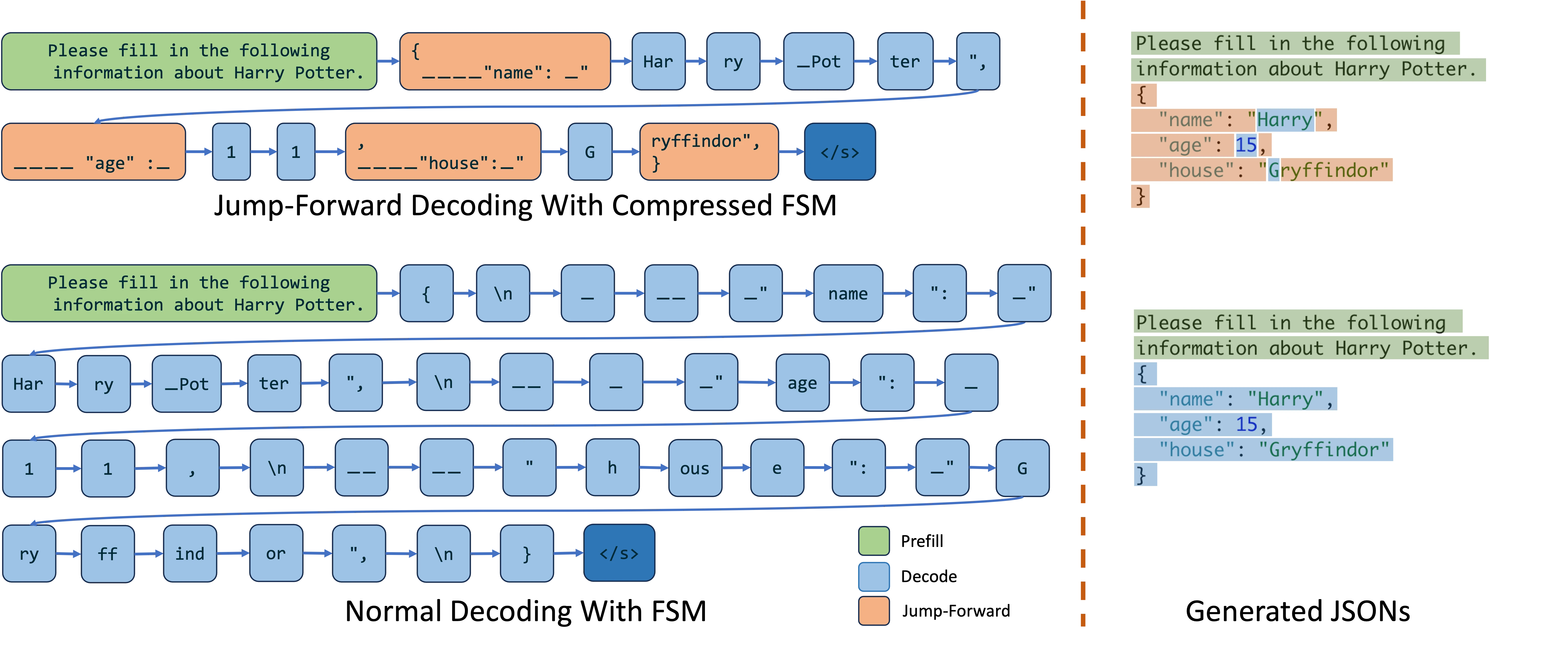

compressed FSM for jump-ahead tokens.

Implemented in (Zheng et al., 2024)

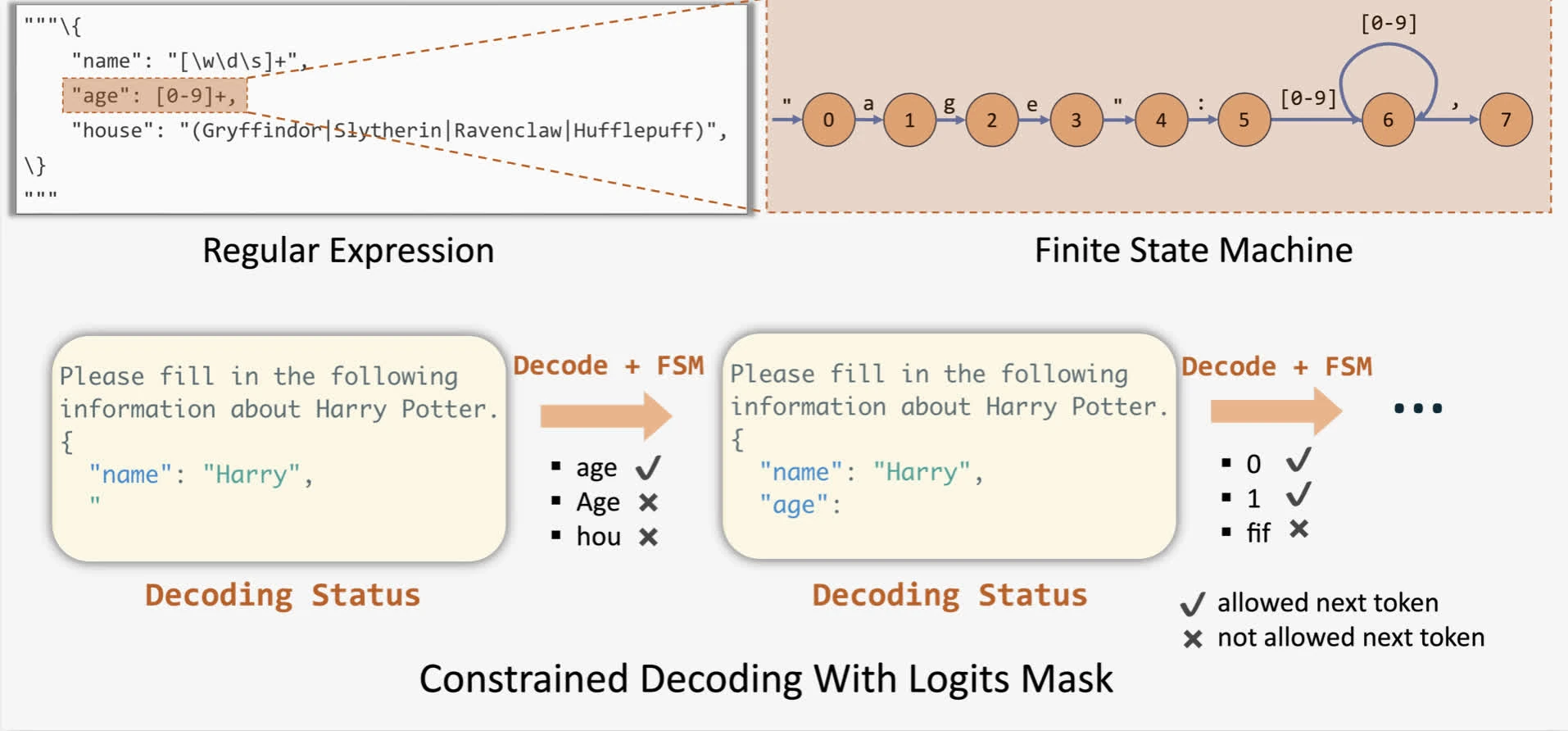

Method 1: FSM-based decoding

intuition: Using FSM (Willard & Louf, 2023) to guide generations by increasing logit bias for tokens that conform to given JSON schema. This allows us to track the current state during decoding and filter out invalid tokens by applying logit bias to the output.

limitation: we can see that given construction of FSM requires token-level access, it can only transition the state by only one token at a time, resulting in slow decoding.

Method 2: Interleaved-based

intuition: breaks down JSON schemas, each containing either a chunk prefill part or constrained decoding part. They are then executed interleaved by inference system. Faster than per-token decoding given that chunked prefill components can process multiple tokens per forward pass

See also https://github.com/guidance-ai/guidance#guidance-acceleration using llama.cpp as backend.

limitation:

- interleaved-based require custom syntax, making it less expressive compared to regex.

- struggles to deal with tokenization boundaries due to conflicts between decode and chunked prefill segments.

- frequent communications between interpreter and back-end adds additional overhead.

Method 3: Jump-Forward Decoding with compressed FSM

tokenization boundary handling

During decoding, it is preferred to combine multiple characters into a single tokens.

For example, when decoding

"Hello"in context of JSON decoding, LLM might output the following token",He,llo,",This may cause some strange behaviour if we combine the last

"with,(this regex"[\w\d\s]*"with the last,will lead to endless decoding because this token",is not valid even if the LM wants to stop.)Fix:

Lien vers l'original

- implement re-tokenization mechanism during jump-forward phase (append string instead of the tokens, followed with re-tokenization of the entire text) add approximately 4% of overhead

- use a comprehensive regex to guide the decoding phase, instead of employing multiple concatenated regex 2

RingAttention

RazorAttention

Paged Attention

by (Kwon et al., 2023)

Used in conjunction with continuous batching, implemented through vLLM

Reduce memory usage of attention mechanism by swapping kv-cache in and out of memory. A block manager is similar to those of virtual memory in OS.

Essentially, it’s a form of paging, such that attention can be stored in contiguous memory. Partitions the KV cache of each sequence into KV blocks.

Another optimization is to use KV compression to reduce the size of the KV cache for longer context.

Given:

- each block contains KV vectors for fixed number of tokens, denoted as block size .

- Key block

- Value block

where is row vector of attention score on j-th KV block.

Bibliographie

- Ainslie, J., Lee-Thorp, J., de Jong, M., Zemlyanskiy, Y., Lebrón, F., & Sanghai, S. (2023). GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints. arXiv preprint arXiv:2305.13245 [arXiv]

- Elhage, N., Nanda, N., Olsson, C., Henighan, T., Joseph, N., Mann, B., Askell, A., Bai, Y., Chen, A., Conerly, T., DasSarma, N., Drain, D., Ganguli, D., Hatfield-Dodds, Z., Hernandez, D., Jones, A., Kernion, J., Lovitt, L., Ndousse, K., … Olah, C. (2021). A Mathematical Framework for Transformer Circuits. Transformer Circuits Thread.

- Kwon, W., Li, Z., Zhuang, S., Sheng, Y., Zheng, L., Yu, C. H., Gonzalez, J. E., Zhang, H., & Stoica, I. (2023). Efficient Memory Management for Large Language Model Serving with PagedAttention. Proceedings of the ACM SIGOPS 29th Symposium on Operating Systems Principles.

- Liu, H., Zaharia, M., & Abbeel, P. (2023). Ring Attention with Blockwise Transformers for Near-Infinite Context. arXiv preprint arXiv:2310.01889 [arXiv]

- Tang, H., Lin, Y., Lin, J., Han, Q., Hong, S., Yao, Y., & Wang, G. (2024). RazorAttention: Efficient KV Cache Compression Through Retrieval Heads. arXiv preprint arXiv:2407.15891 [arXiv]

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2023). Attention Is All You Need. arXiv preprint arXiv:1706.03762 [arXiv]

- Zheng, L., Yin, L., Xie, Z., Sun, C., Huang, J., Yu, C. H., Cao, S., Kozyrakis, C., Stoica, I., Gonzalez, J. E., Barrett, C., & Sheng, Y. (2024). SGLang: Efficient Execution of Structured Language Model Programs. arXiv preprint arXiv:2312.07104 [arXiv]

Remarque

-

base: a random request correspond to node will be processed.

- All requests correspond to nodes on path doesn’t need recomputation.

- Thus, computation complexity for requests of nodes is aligned with DFS

induction: assume we visit node , and the visited node align with DFS order. Let denote path of .

- Each node that has not been visited has the lowest common ancestor with visited nodes on .

- Since nodes on are cached, a node that has yet to be visited with lowest common accestor on will have the longest shared prefix

- longest-shared-prefix-first order will select , which is a valid DFS q.e.d