See also: Jupyter notebook, PDF, solutions.

question 1.

problem 1.

part 1

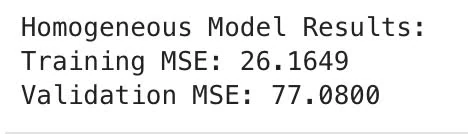

- Divide the dataset into three parts: 1800 samples for training, 200 samples for validation, and 200 samples for testing. Perform linear OLS (without regularization) on the training samples twice: first with a homogeneous model (i.e., where the y-intercept is zero) and then with a non-homogeneous model (allowing for a non-zero y-intercept). Report the MSE on both the training data and the validation data for each model.

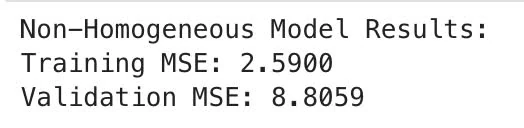

- Compare the results. Which approach performs better? Why? Apply the better-performing approach to the test set and report the MSE.

- Do you observe significant overfitting in any of the cases?

-

For the homogeneous model, the training MSE is 26.1649 and the validation MSE is 77.0800.

For the non-homogeneous model, the training MSE is 2.5900 and the validation MSE is 8.8059.
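A minimal NumPy sketch of the two fits follows. It assumes the features are in an array `X` (n × d) and the targets in `y`. The helper names `fit_ols` and `mse` are hypothetical, and the synthetic data (20 features, constant offset 5) is only a stand-in for the real dataset, so the numbers it produces will not match the ones reported above.

```python
import numpy as np

def fit_ols(X, y, intercept):
    """Ordinary least squares; returns (w, b), with b = 0.0 for the homogeneous model."""
    if intercept:
        # Append a column of ones so the last coefficient acts as the intercept.
        Xa = np.hstack([X, np.ones((X.shape[0], 1))])
        coef, *_ = np.linalg.lstsq(Xa, y, rcond=None)
        return coef[:-1], coef[-1]
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef, 0.0

def mse(X, y, w, b):
    """Mean squared error of the affine predictor X @ w + b."""
    return float(np.mean((X @ w + b - y) ** 2))

# Hypothetical stand-in data: zero-mean features plus a constant offset of 5.
rng = np.random.default_rng(0)
X = rng.normal(size=(2200, 20))
y = X @ rng.normal(size=20) + 5.0 + 0.1 * rng.normal(size=2200)
X_tr, y_tr = X[:1800], y[:1800]
X_va, y_va = X[1800:2000], y[1800:2000]

results = {}
for name, intercept in [("homogeneous", False), ("non-homogeneous", True)]:
    w, b = fit_ols(X_tr, y_tr, intercept)
    results[name] = (mse(X_tr, y_tr, w, b), mse(X_va, y_va, w, b))
    print(name, results[name])
```

Because the synthetic targets have a non-zero offset, the homogeneous model cannot absorb it and its MSE stays high. This mirrors the kind of gap seen in the reported results.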

-

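The non-homogeneous model clearly performs better than the homogeneous model. Its MSE is roughly nine to ten times lower on both the training and validation sets, which means its predictions are much closer to the actual values. A likely reason is that the targets have a non-zero offset, and a model forced through the origin cannot capture it. The gap between training and validation MSE is also much smaller for the non-homogeneous model (about 6.2 versus 50.9), which indicates better generalisation.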

The test-set MSE for the non-homogeneous model is 2.5900.

-

In both cases the training MSE is noticeably lower than the validation MSE, which indicates some overfitting. The non-homogeneous model has a much smaller gap between training and validation MSE, so its overfitting is mild. The homogeneous model shows a larger absolute gap. Its training MSE is also high, however, which points to bias from forcing the intercept to zero (underfitting) rather than to overfitting alone.
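The overfitting comparison comes down to simple arithmetic on the reported numbers. The short sketch below recomputes the absolute gap (validation minus training MSE) and the relative gap (validation divided by training MSE) for both models, using only the values stated above.

```python
# Reported (training MSE, validation MSE) pairs from Part 1.
reported = {
    "homogeneous": (26.1649, 77.0800),
    "non-homogeneous": (2.5900, 8.8059),
}

gaps, ratios = {}, {}
for name, (train, val) in reported.items():
    gaps[name] = val - train      # absolute generalisation gap
    ratios[name] = val / train    # relative generalisation gap
    print(f"{name}: gap = {gaps[name]:.4f}, val/train = {ratios[name]:.2f}")
```

The absolute gap is about 50.92 for the homogeneous model and 6.22 for the non-homogeneous one. The relative gap (about 2.95 versus 3.40) is similar for both models, so the "less overfitting" reading rests mainly on the absolute gap.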

part 2

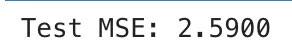

- Divide the dataset into three parts: 200 samples for training, 1800 samples for validation, and 200 samples for testing. Perform linear OLS (without regularization) on the training samples twice: first with a homogeneous model (i.e., where the y-intercept is zero) and then with a non-homogeneous model (allowing for a non-zero y-intercept). Report the MSE on both the training data and the validation data for each model.

- Compare these results with those from the previous part. Do you observe less overfitting or more overfitting? How did you arrive at this conclusion?

-

For the homogeneous model, the training MSE is 0.000 and the validation MSE is 151.2655.

For the non-homogeneous model, the training MSE is 0.000 and the validation MSE is 15.8158.

-

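We observe a clear increase in overfitting. Both models fit the training data perfectly (training MSE of 0), while their validation MSEs are much higher than in the previous part (151.2655 versus 77.0800, and 15.8158 versus 8.8059). The non-homogeneous model still outperforms the homogeneous model, but the gap between training and validation MSE is considerably larger than in the previous case.

This is largely due to the smaller training set (200 training samples versus 1800). Each sample has 900 features, so 200 samples are fewer than the number of model parameters. The least-squares system is therefore underdetermined, and the model can fit the training data exactly while generalising poorly.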
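The zero training MSE can be reproduced with a toy example. The sketch below assumes 900 features per sample (as in the 900-pixel data of Problem 3) and uses random, hypothetical data. With 200 samples and 901 parameters (900 weights plus an intercept), `np.linalg.lstsq` returns a minimum-norm solution that interpolates the training targets, even when those targets are pure noise.

```python
import numpy as np

rng = np.random.default_rng(1)
n_train, d = 200, 900  # fewer samples than features, as in Part 2
X = rng.normal(size=(n_train, d))
y = rng.normal(size=n_train)  # hypothetical targets: pure noise

# Non-homogeneous model: append a column of ones for the intercept.
Xa = np.hstack([X, np.ones((n_train, 1))])
coef, *_ = np.linalg.lstsq(Xa, y, rcond=None)  # minimum-norm least-squares solution
train_mse = float(np.mean((Xa @ coef - y) ** 2))
print(train_mse)  # effectively zero: the training data is interpolated
```

A zero training error here says nothing about the signal. It only reflects that the design matrix has full row rank.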

problem 2.

part 1

Divide the dataset into three parts:

- Training data: select 200 data points.
- Validation data: assign 1800 data points.
- Testing data: set aside the remaining 200 data points for testing.
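The split can be expressed as a small index-shuffling helper. The write-up does not say whether the notebook shuffled the data before splitting, so the fixed-seed random permutation below is an assumption. `split_indices` is a hypothetical helper name.

```python
import numpy as np

def split_indices(n_total, n_train, n_val, n_test, seed=0):
    """Shuffle sample indices and cut them into disjoint train/validation/test parts."""
    assert n_train + n_val + n_test <= n_total
    perm = np.random.default_rng(seed).permutation(n_total)
    train = perm[:n_train]
    val = perm[n_train:n_train + n_val]
    test = perm[n_train + n_val:n_train + n_val + n_test]
    return train, val, test

# Problem 2 split: 200 / 1800 / 200 out of 2200 samples.
tr, va, te = split_indices(2200, 200, 1800, 200)
```

The same helper covers the Problem 1 splits by changing the three sizes, for example `split_indices(2200, 1800, 200, 200)`.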

- Run Regularized Least Squares (non-homogeneous) using the 200 training data points. Choose values of lambda from the set {exp(-2), exp(-1.5), exp(-1), …, exp(3.5), exp(4)}, i.e., the exponent ranges from -2 to 4 with a step size of 0.5. For each value of lambda, run RLS on the training data and compute the training MSE and validation MSE.
- Plot the training MSE and validation MSE as functions of lambda.

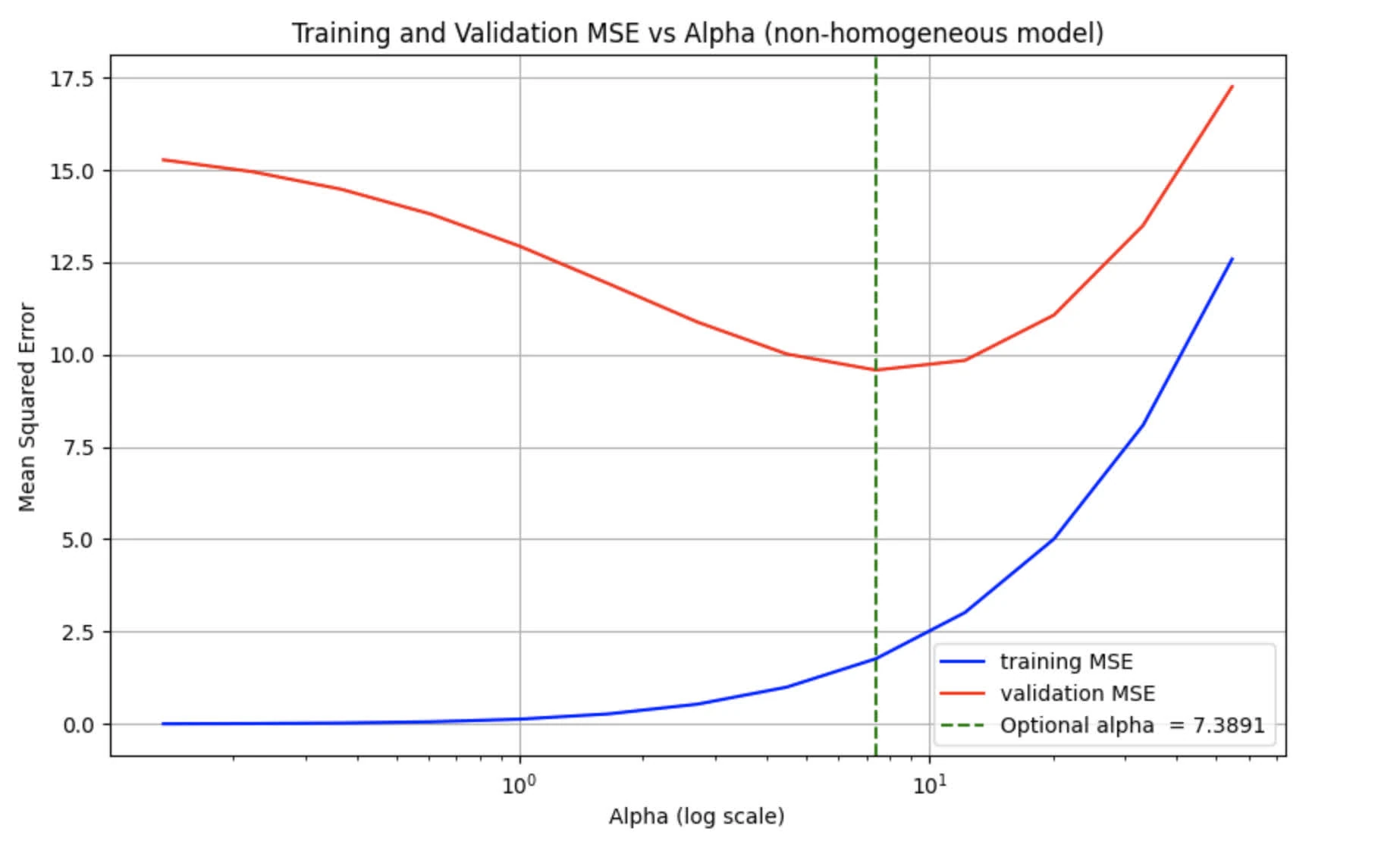

The graph above shows the training and validation MSE as functions of lambda.
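A minimal sketch of the lambda sweep is given below, assuming closed-form ridge regression with an unpenalized intercept. The objective is taken as ||Xw + b - y||² + λ||w||². The assignment's exact scaling of the loss and whether the intercept is penalized are not visible here, so both are assumptions. `fit_rls`, `mse` and the synthetic data are hypothetical stand-ins.

```python
import numpy as np

def fit_rls(X, y, lam):
    """Ridge regression with an unpenalized intercept.

    Minimizes ||X w + b - y||^2 + lam * ||w||^2. For fixed w the optimal
    b is mean(y) - mean(X) @ w, so centering X and y removes b from the problem.
    """
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    d = X.shape[1]
    w = np.linalg.solve(Xc.T @ Xc + lam * np.eye(d), Xc.T @ yc)
    b = y_mean - x_mean @ w
    return w, b

def mse(X, y, w, b):
    """Mean squared error of the affine predictor X @ w + b."""
    return float(np.mean((X @ w + b - y) ** 2))

# exp(-2), exp(-1.5), ..., exp(4): 13 values with exponent step 0.5.
lambdas = np.exp(np.arange(-2.0, 4.5, 0.5))

# Hypothetical stand-in data with more features than training samples.
rng = np.random.default_rng(0)
d = 300
w_true = rng.normal(size=d)
X_tr = rng.normal(size=(200, d))
y_tr = X_tr @ w_true + 3.0 + rng.normal(size=200)
X_va = rng.normal(size=(1800, d))
y_va = X_va @ w_true + 3.0 + rng.normal(size=1800)

train_mse, val_mse = [], []
for lam in lambdas:
    w, b = fit_rls(X_tr, y_tr, lam)
    train_mse.append(mse(X_tr, y_tr, w, b))
    val_mse.append(mse(X_va, y_va, w, b))
```

The two lists can then be plotted against `np.log(lambdas)`, for example with matplotlib's `plt.plot`. Training MSE can only stay level or grow as lambda increases, which is why the validation curve, not the training curve, is used for model selection.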

part 2

- What is the best value for lambda? Why?

- Use the best value of lambda to report the results on the test set.

-

The best lambda is the one at the lowest point of the validation MSE curve, because validation MSE estimates performance on unseen data. Training MSE only grows as lambda increases, so it cannot be used to choose lambda. From the graph, we observe it is around

-

Using this value of lambda, we get a test MSE of around 1.3947.
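Selecting lambda is an argmin over the validation curve. The sketch below uses a made-up, U-shaped validation curve (minimum at log(lambda) = 1) purely to illustrate the step. It is not the assignment's data. `select_lambda` is a hypothetical helper name.

```python
import numpy as np

def select_lambda(lambdas, val_mse):
    """Return (best_lambda, index) minimizing validation MSE; ties go to the first."""
    i = int(np.argmin(val_mse))
    return float(lambdas[i]), i

lambdas = np.exp(np.arange(-2.0, 4.5, 0.5))
# Made-up validation curve for illustration only.
val_mse = (np.log(lambdas) - 1.0) ** 2 + 1.5
best_lam, best_i = select_lambda(lambdas, val_mse)
```

After selection, the model is refit with `best_lam` and evaluated once on the test set. The write-up does not state whether the refit used only the training data or training plus validation data. Either is a reasonable design choice, as long as the test set is not used for selection.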

problem 3.

part 1

Choose a preprocessing approach (i.e., select a mapping) that transforms the 900-dimensional data points (900 pixels) into a new space. This new space can be either lower-dimensional or higher-dimensional. Clearly explain your preprocessing approach.

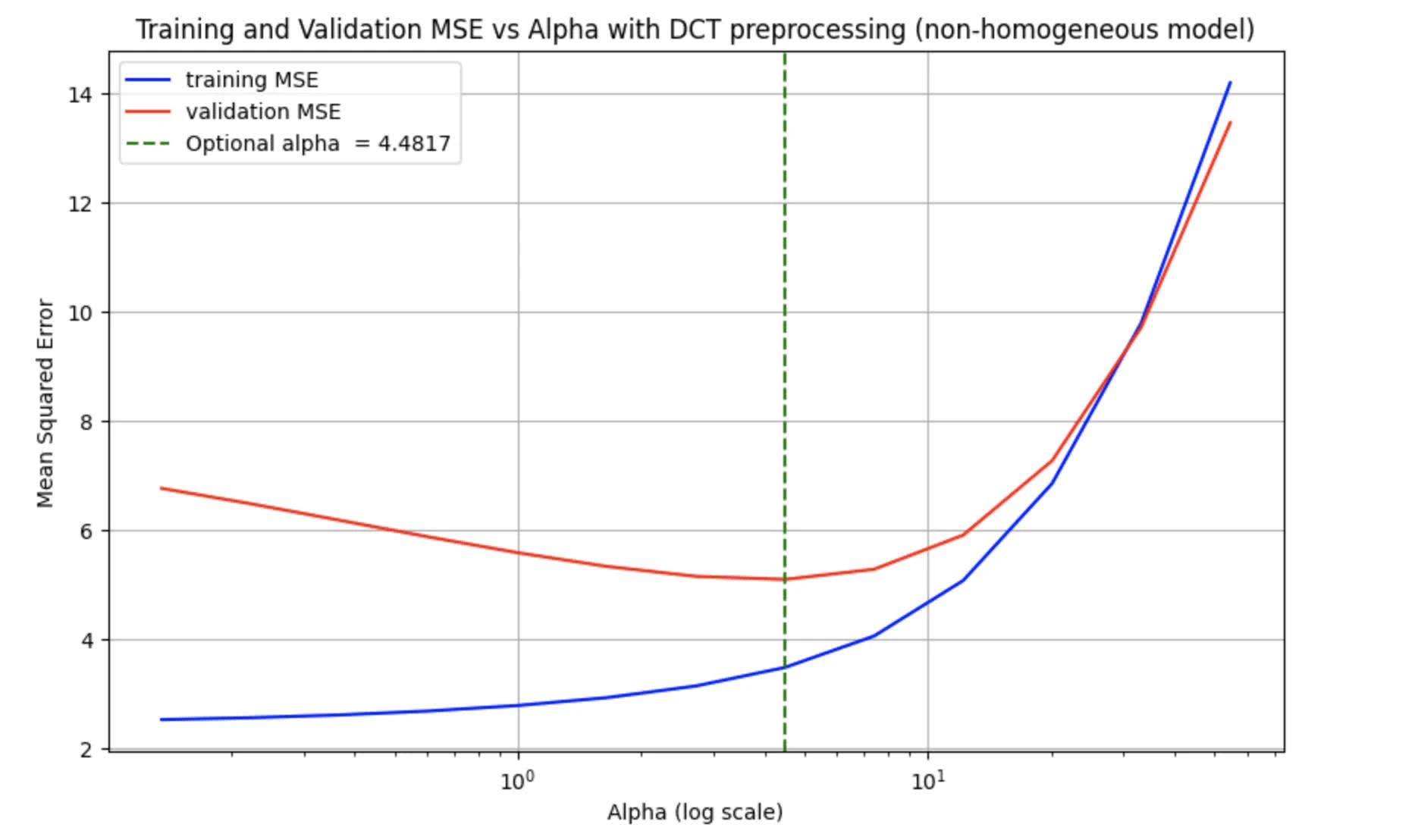

We apply a 2D Discrete Cosine Transform (DCT) to each image, followed by feature selection that reduces dimensionality by keeping only the top-k coefficients.

Reason:

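- The DCT is widely used in image compression (e.g., JPEG). It transforms an image from the spatial domain to the frequency domain, where most of the energy of natural images is concentrated in a small number of coefficients.

- Reducing the dimensionality helps limit overfitting, since only 200 samples are used for training.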

In this case, we choose n_coeffs=100.
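The preprocessing can be sketched in NumPy alone by building the orthonormal DCT-II matrix C and computing C X Cᵀ for each image. Two assumptions apply. First, each 900-pixel sample is treated as a 30 × 30 image. Second, "top-k" is interpreted as the k coefficients with the largest variance on the training set. The notebook may instead keep a fixed low-frequency block. All function names are hypothetical, and `scipy.fft.dctn` with `norm="ortho"` would be an equivalent library route for the transform itself.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix C, so that C @ v is the DCT of a length-n vector v."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    m = np.arange(n)[None, :]  # sample index (columns)
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * m + 1) * k / (2 * n))
    C[0, :] = np.sqrt(1.0 / n)  # DC row uses the smaller normalization
    return C

def dct2_features(X_flat, side=30):
    """2D DCT of each flattened side x side image; returns shape (n, side * side)."""
    C = dct_matrix(side)
    imgs = X_flat.reshape(-1, side, side)
    coeffs = C @ imgs @ C.T  # batched C X C^T via matmul broadcasting
    return coeffs.reshape(len(X_flat), -1)

def top_k_indices(F_train, k):
    """Indices of the k coefficients with the largest variance on the training set."""
    return np.argsort(F_train.var(axis=0))[::-1][:k]

# Hypothetical stand-in for the pixel data.
rng = np.random.default_rng(0)
X_train = rng.random((200, 900))
X_val = rng.random((1800, 900))

F_train = dct2_features(X_train)
idx = top_k_indices(F_train, k=100)  # n_coeffs = 100
Z_train = F_train[:, idx]
Z_val = dct2_features(X_val)[:, idx]  # reuse the indices chosen on the training set
```

Choosing the indices on the training set only, and reusing them for validation and test, keeps the held-out data out of the preprocessing decisions. Because the transform is orthonormal, it preserves each image's squared norm, so no information is lost until coefficients are dropped.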

part 2

Implement your preprocessing approach.

See the Jupyter notebook for the implementation.

part 3

Report the MSE on the training and validation sets for different values of lambda and plot them. As mentioned, your approach should perform better in order to get points. Choose the best value of lambda, apply your preprocessing approach to the test set, and then report the MSE after running RLS.

The graph shows the training and validation MSE as functions of lambda. The optimal alpha (i.e., lambda) is found to be

The resulting test MSE is around 3.2911.

question 2.

problem statement

In this question, we will use least squares to find the best line that fits a non-linear function, namely

For this, assume that you are given a set of training points.

Find a line (i.e ) that fits the training data the best when . Write down your calculations as well as the final values for and .

Additional notes: the assumption means that we are dealing with an integral rather than a finite sum. You can also assume that the input is uniformly distributed on [0, 1].

We need to minimize the sum of squared errors:
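For reference, here is a general sketch of the calculation under the stated assumptions. The symbol names are assumed because the original formulas are not legible: the line is written as y = a x + b and the target function as f(x). With x uniform on [0, 1], the averaged squared error becomes an integral, and setting both partial derivatives to zero gives the usual covariance form, using E[x] = 1/2 and Var(x) = 1/12.

$$
E(a, b) = \lim_{n \to \infty} \frac{1}{n}\sum_{i=1}^{n}\bigl(f(x_i) - a x_i - b\bigr)^2 = \int_0^1 \bigl(f(x) - a x - b\bigr)^2\,dx
$$

$$
a = \frac{\operatorname{Cov}(x, f(x))}{\operatorname{Var}(x)} = 12\left(\int_0^1 x f(x)\,dx - \frac{1}{2}\int_0^1 f(x)\,dx\right),
\qquad
b = \int_0^1 f(x)\,dx - \frac{a}{2}
$$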

We can compute :

Compute :

Therefore, we can compute the covariance:

The slope and intercept can then be computed as:

Thus, the best-fitting line is
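The closed form can be checked numerically. The sketch below approximates E[f(x)] and E[x f(x)] for x ~ Uniform[0, 1] with a midpoint rule, then applies slope = Cov(x, f) / Var(x) and intercept = E[f] - slope · E[x]. It uses the hypothetical test function f(x) = x², not the assignment's function, whose exact best line is x - 1/6. `best_line_uniform01` is a hypothetical helper name.

```python
import numpy as np

def best_line_uniform01(f, n=200_001):
    """Least-squares line a*x + b for f on [0, 1] with x ~ Uniform[0, 1].

    Approximates E[f(x)] and E[x f(x)] with a midpoint rule, then uses
    a = Cov(x, f(x)) / Var(x) with E[x] = 1/2 and Var(x) = 1/12.
    """
    x = (np.arange(n) + 0.5) / n  # midpoints of n equal cells on [0, 1]
    fx = f(x)
    e_f = fx.mean()
    e_xf = (x * fx).mean()
    cov = e_xf - 0.5 * e_f
    a = cov / (1.0 / 12.0)
    b = e_f - 0.5 * a
    return a, b

# Hypothetical check function, not the one from the assignment.
a, b = best_line_uniform01(lambda x: x ** 2)
print(a, b)  # close to 1 and -1/6
```

Substituting the assignment's f(x) into the same function gives a numerical cross-check of the hand-derived slope and intercept.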

question 3.

problem statement

In this question, we would like to fit a line with zero y-intercept () to the curve . However, instead of minimising the sum of squared errors, we want to minimise the following objective function:

Assume that the distribution of is uniform on [2, 4]. What is the optimal value for ? Show your work.

Assumption: log is base 10.

We need to minimize the objective function

where and

Given that the input is uniformly distributed on [2, 4], we can express the sum as an integral:

Let ; we can then rewrite the objective function as:

Compute each integral:

Since we are only interested in finding the optimal , we take the partial derivative of the objective function with respect to it:

Setting it to zero gives the minimizing :

Therefore,

Thus, the optimal value for a is
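A closed-form optimum like this can be sanity-checked numerically. You approximate the expected objective over x ~ Uniform[2, 4] with a trapezoidal rule and minimize it over a with golden-section search. The assignment's actual objective is not legible here, so the sketch uses a hypothetical one: the squared log10 error between the line a·x and the curve x². Its exact minimizer is a = exp(E[ln x]) = 8/e. To check the real answer, replace `loss` with the actual objective. `expected_loss` and `golden_min` are hypothetical helper names.

```python
import numpy as np

def expected_loss(a, loss, lo=2.0, hi=4.0, n=20_001):
    """Average of loss(a, x) for x ~ Uniform[lo, hi], via the trapezoidal rule."""
    x = np.linspace(lo, hi, n)
    v = loss(a, x)
    dx = (hi - lo) / (n - 1)
    integral = dx * (v.sum() - 0.5 * (v[0] + v[-1]))
    return integral / (hi - lo)  # uniform density is 1 / (hi - lo)

def golden_min(g, lo, hi, tol=1e-9):
    """Golden-section search for the minimizer of a unimodal function g on [lo, hi]."""
    r = (np.sqrt(5.0) - 1.0) / 2.0
    c, d = hi - r * (hi - lo), lo + r * (hi - lo)
    while hi - lo > tol:
        if g(c) < g(d):
            hi, d = d, c  # minimum lies in [lo, old d]
            c = hi - r * (hi - lo)
        else:
            lo, c = c, d  # minimum lies in [old c, hi]
            d = lo + r * (hi - lo)
    return 0.5 * (lo + hi)

# Hypothetical objective for illustration only; its exact minimizer is a = 8/e.
loss = lambda a, x: (np.log10(a * x) - np.log10(x ** 2)) ** 2
a_opt = golden_min(lambda a: expected_loss(a, loss), 0.5, 10.0)
print(a_opt)  # close to 8/e
```

Golden-section search only assumes the objective is unimodal in a. This holds for the hypothetical loss, because it is a convex function of log10(a).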