stateless, and usually have no feedback loop.

universal approximation theorem

see also the original paper (Cybenko, 1989)

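idea: a feedforward network with a single hidden layer of sigmoid units can approximate any continuous function on a compact subset of ℝⁿ (e.g. the unit hypercube) arbitrarily closely, given enough hidden units.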
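The theorem only says that suitable parameters exist. For any continuous f and any ε > 0 there is some N and some αⱼ, wⱼ, θⱼ such that G(x) = Σⱼ αⱼ σ(wⱼ·x + θⱼ) stays within ε of f. Cybenko's proof is non-constructive, so the sketch below does not follow the paper. It uses a different, standard constructive trick instead. Steep sigmoids behave like step functions, and adding one step per grid cell builds a staircase that tracks f. The example assumes a 1-D function on an interval [a, b]. The helper names (`stable_sigmoid`, `build_network`, `max_error`) and the steepness factor are illustrative choices.

```python
import math

def stable_sigmoid(z):
    """Logistic sigmoid that avoids overflow in math.exp for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def build_network(f, a, b, n_hidden, steepness=20.0):
    """One hidden layer of sigmoids: G(x) = f(a) + sum_k alpha_k * sigmoid(w * (x - t_k)).

    Hidden unit k is a steep sigmoid centred on the midpoint t_k of grid cell k,
    so it acts like a step of height alpha_k = f(x_k) - f(x_{k-1}).
    """
    h = (b - a) / n_hidden
    knots = [a + k * h for k in range(n_hidden + 1)]
    alphas = [f(knots[k]) - f(knots[k - 1]) for k in range(1, n_hidden + 1)]
    centres = [(knots[k - 1] + knots[k]) / 2 for k in range(1, n_hidden + 1)]
    w = steepness / h      # input weight shared by every hidden unit
    out_bias = f(a)        # output-layer bias

    def G(x):
        return out_bias + sum(alpha * stable_sigmoid(w * (x - t))
                              for alpha, t in zip(alphas, centres))
    return G

def max_error(f, G, a, b, n_points=1000):
    """Largest |f(x) - G(x)| over an evenly spaced test grid on [a, b]."""
    xs = [a + i * (b - a) / (n_points - 1) for i in range(n_points)]
    return max(abs(f(x) - G(x)) for x in xs)

# more hidden units -> smaller worst-case error on sin over [0, pi]
for n in (10, 50, 250):
    G = build_network(math.sin, 0.0, math.pi, n)
    print(n, max_error(math.sin, G, 0.0, math.pi))
```

Each step has height at most the change in f across one cell. The error therefore shrinks as the grid is refined, which is the intuition behind "given enough hidden units".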

regression

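Think of it as a stack of linear layers with activation functions in between, ending in a linear output layer trained with a mean-squared-error loss. With a single linear layer and no activation, it reduces to plain linear regression, as in the example below.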

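```python
import torch
import torch.nn as nn
import torch.optim as optim

class LinearRegression(nn.Module):
    def __init__(self, input_dim, output_dim):
        super().__init__()
        self.fc = nn.Linear(input_dim, output_dim)  # y = x W^T + b

    def forward(self, x):
        return self.fc(x)

# hypothetical random data standing in for a real dataset:
# 64 samples, 224 input features, 10 regression targets
X = torch.randn(64, 224)
Y = torch.randn(64, 10)

model = LinearRegression(224, 10)
loss = nn.MSELoss()
optimizer = optim.SGD(model.parameters(), lr=0.005)

for ep in range(10):
    optimizer.zero_grad()   # clear gradients left over from the previous step
    y_pred = model(X)       # forward pass
    l = loss(y_pred, Y)     # MSELoss(input, target)
    l.backward()            # backpropagate to compute gradients
    optimizer.step()        # SGD update of the weights
```

classification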

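Targets are one-hot encoded (binary or multiclass). The output layer produces one score per class, which goes through a sigmoid (binary) or a softmax (multiclass), and the model is trained with a cross-entropy loss.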
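Here is a minimal stdlib-only sketch of the multiclass output head. Softmax turns the logits into class probabilities. Cross-entropy against a one-hot target then reduces to −log of the probability given to the true class. The function names and example logits are illustrative and do not come from the original notes.

```python
import math

def one_hot(label, n_classes):
    """Encode an integer class label as a one-hot vector."""
    return [1.0 if i == label else 0.0 for i in range(n_classes)]

def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target_one_hot):
    """Cross-entropy -sum_i y_i * log(p_i); only the true class contributes for a one-hot y."""
    return -sum(y * math.log(p) for y, p in zip(target_one_hot, probs) if y > 0)

logits = [2.0, 1.0, 0.1]   # raw scores from the last linear layer
probs = softmax(logits)
target = one_hot(0, 3)     # true class is 0 -> [1.0, 0.0, 0.0]
print(probs, cross_entropy(probs, target))
```

In the binary case, a single sigmoid output with binary cross-entropy plays the same role. In PyTorch, `nn.CrossEntropyLoss` combines the log-softmax and the negative log-likelihood, so it expects raw logits rather than probabilities.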

backpropagation

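context: SGD updates each weight w using the gradient of the loss L, w ← w − η ∂L/∂w, so we need ∂L/∂w for every weight in the network.

Computing each of these partial derivatives separately with the chain rule is expensive. In a deep model, the same intermediate terms get recomputed over and over.

intuition: do one forward pass and save the intermediate activations. Then propagate the error backward from the output layer, reusing the saved values and the gradients already computed for later layers. This way, every gradient needed to minimize the error comes out of a single backward pass.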
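To make the reuse concrete, here is a hand-written sketch on a hypothetical tiny network: one input, one sigmoid hidden unit, one linear output, and a squared-error loss. The forward pass caches the hidden activation. The backward pass reuses it together with the upstream gradient dL/dy. A finite-difference check confirms the result. The parameter names and values are made up for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x, t):
    """Forward pass; returns the loss and the cache needed by backward()."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)      # hidden activation
    y = w2 * h + b2               # linear output
    loss = 0.5 * (y - t) ** 2
    return loss, (x, h, y, t)

def backward(params, cache):
    """Chain rule from the output back to the first layer, reusing cached values."""
    _, _, w2, _ = params
    x, h, y, t = cache
    dy = y - t                    # dL/dy
    dw2 = dy * h                  # dL/dw2
    db2 = dy                      # dL/db2
    dh = dy * w2                  # dL/dh, shared by both first-layer gradients
    dz = dh * h * (1.0 - h)       # dL/dz, since sigmoid'(z) = h * (1 - h)
    return [dz * x, dz, dw2, db2] # [dL/dw1, dL/db1, dL/dw2, dL/db2]

def numerical_grad(params, x, t, eps=1e-6):
    """Central finite differences: two forward passes per parameter."""
    grads = []
    for i in range(len(params)):
        plus = list(params)
        plus[i] += eps
        minus = list(params)
        minus[i] -= eps
        grads.append((forward(plus, x, t)[0] - forward(minus, x, t)[0]) / (2 * eps))
    return grads

params = [0.5, -0.3, 1.2, 0.1]    # w1, b1, w2, b2 (arbitrary values)
loss, cache = forward(params, x=1.5, t=0.8)
print(backward(params, cache))
print(numerical_grad(params, x=1.5, t=0.8))
```

The finite-difference version needs two forward passes per parameter. Backpropagation gets all gradients from one forward and one backward pass, and that saving is what makes it practical for large networks.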

vanishing gradient

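happens in deep networks: the partial derivatives of the loss with respect to the weights of the early layers become vanishingly small.

Backpropagation applies the chain rule, propagating error signals backward from the output layer through all the hidden layers to the input. In a very deep network, each early-layer gradient is therefore a product of many per-layer factors.

When neurons saturate (e.g. a sigmoid whose output is close to 0 or 1), their local derivative is close to zero. Even at best, the sigmoid's derivative is only 0.25. The product therefore shrinks roughly exponentially with depth, almost no gradient reaches the first layers, and those layers barely learn.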
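Here is a quick numerical illustration on a hypothetical chain of scalar sigmoid units with every weight set to 1 and input 0. The gradient reaching the input is the product of the local derivatives. Since σ'(z) ≤ 0.25, that product shrinks at least as fast as 0.25^depth.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def input_gradient(depth, w=1.0, x=0.0):
    """d(output)/d(input) for the chain h_{i+1} = sigmoid(w * h_i), via the chain rule."""
    grad, h = 1.0, x
    for _ in range(depth):
        h = sigmoid(w * h)            # h is now h_{i+1}
        grad *= w * h * (1.0 - h)     # local factor w * sigmoid'(w * h_i)
    return grad

for depth in (1, 5, 10, 20):
    print(depth, input_gradient(depth))
```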

solution:

- we can probably use activation functions (Leaky ReLU)

- better initialisation

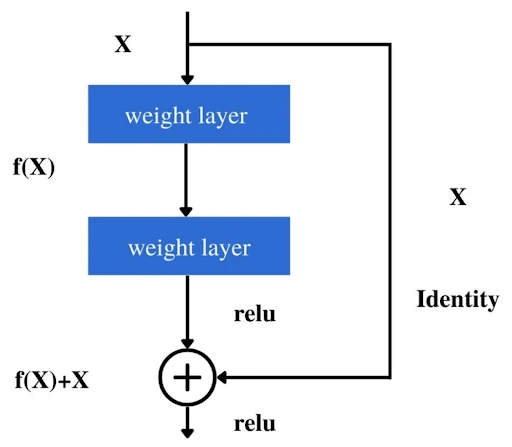

- residual network
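The same chain-rule product can be sketched for the alternatives in the list. A Leaky ReLU unit has local derivative 1 for positive inputs and a small slope otherwise (0.01 here, which is PyTorch's default). A residual block y = x + F(x) has derivative 1 + F'(x), so the identity path keeps the product from collapsing. The chain below is hypothetical: scalar units, weight 1, and a sigmoid branch F inside each residual block, purely for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def leaky_relu_grad(z, slope=0.01):
    """Derivative of Leaky ReLU: 1 for positive inputs, `slope` otherwise."""
    return 1.0 if z > 0 else slope

def chain_gradient(depth, kind, x=0.5):
    """Product of per-layer derivatives d h_{i+1} / d h_i for a scalar chain with weight 1."""
    grad, h = 1.0, x
    for _ in range(depth):
        if kind == "sigmoid":
            s = sigmoid(h)
            grad *= s * (1.0 - s)           # at most 0.25 per layer
            h = s
        elif kind == "leaky_relu":
            grad *= leaky_relu_grad(h)
            h = h if h > 0 else 0.01 * h
        elif kind == "residual":
            s = sigmoid(h)                  # branch F(h) = sigmoid(h)
            grad *= 1.0 + s * (1.0 - s)     # d/dh [h + F(h)] = 1 + F'(h)
            h = h + s
        else:
            raise ValueError(f"unknown layer kind: {kind}")
    return grad

for kind in ("sigmoid", "leaky_relu", "residual"):
    print(kind, chain_gradient(20, kind))
```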

regularization

Neural networks are prone to overfitting because they are often over-parameterized.

- add a regularization term to the objective function (e.g. an L2 penalty on the weights)

- early stopping

- adding noise

- structural regularization, e.g. dropout
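Two of these techniques fit in a few lines of plain Python. The L2 penalty adds λ·Σw² to the data loss; `lam` is a hypothetical hyperparameter name. Early stopping tracks the validation loss and stops once it has not improved for `patience` epochs. Both helpers are illustrative. Frameworks ship their own versions; for example, the `weight_decay` argument of `torch.optim.SGD` applies an L2-style penalty through the gradient.

```python
def l2_penalized_loss(data_loss, weights, lam=1e-3):
    """Objective with an L2 regularization term: data_loss + lam * sum(w^2)."""
    return data_loss + lam * sum(w * w for w in weights)

def early_stopping_epoch(val_losses, patience=3):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses.

    Training stops once `patience` consecutive epochs fail to beat the best loss
    seen so far; the weights saved at `best_epoch` are the ones to keep.
    """
    best_loss, best_epoch = float("inf"), -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# hypothetical validation curve: it falls, then rises as the model starts to overfit
val = [1.00, 0.80, 0.65, 0.60, 0.62, 0.66, 0.71, 0.78]
print(early_stopping_epoch(val, patience=3))
print(l2_penalized_loss(0.5, [1.0, -2.0, 0.5], lam=0.1))
```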

dropout

a form of structural regularization

a technique that randomly drops each node (unit) with probability p during training; at test time every node is kept.
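Here is a minimal stdlib sketch of the common inverted-dropout variant; the notes do not say which variant they mean, so this is an assumption. During training, each unit is zeroed with probability p and the survivors are scaled by 1/(1 − p), so the expected activation is unchanged. At evaluation time the input passes through untouched. PyTorch's `nn.Dropout(p)` behaves this way in training mode and acts as the identity after `model.eval()`.

```python
import random

def dropout(activations, p, training=True, rng=random):
    """Inverted dropout: zero each unit with probability p, scale survivors by 1/(1-p)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if not training or p == 0.0:
        return list(activations)          # evaluation mode: identity
    if p == 1.0:
        return [0.0 for _ in activations] # every unit dropped
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else a * scale for a in activations]

rng = random.Random(0)                    # seeded for reproducibility
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=rng))
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, training=False))
```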


Bibliography

- Cybenko, G. (1989). Approximation by superpositions of a sigmoidal function. Mathematics of Control, Signals, and Systems, 2(4), 303–314.