See also: this talk I gave at Hack the North 2023.

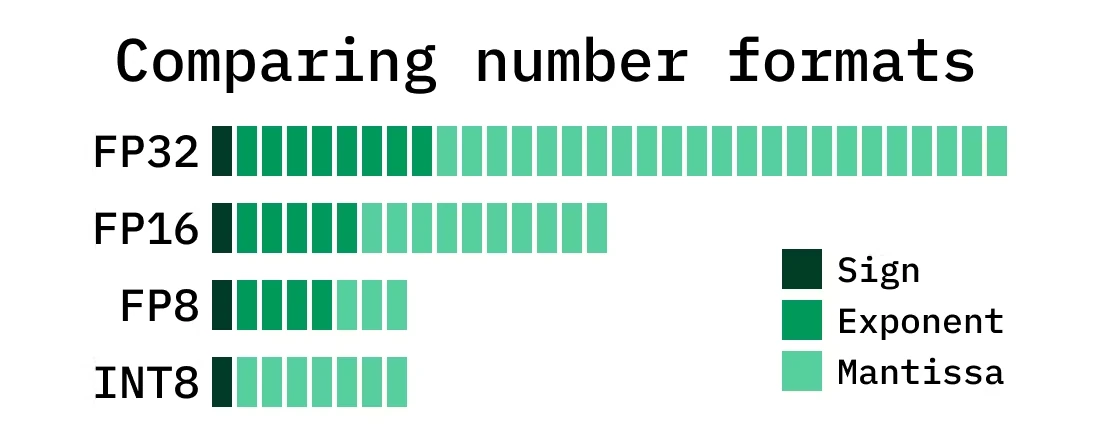

Quantization reduces the computational and memory costs of running inference by representing the weights and activations with low-precision data types:

- `int16`
- half precision (`fp16`)
- `bfloat16`
- `int8`
- `fp8`

Note

This also applies to post-training quantization, where the methodology is applied after the model has been trained, instead of during load-time.

metrics for calibration

The idea is to measure how much the probability distribution of the values changes when scaling to a lower precision, for example from `int16` to `int8`.

KL calibration
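To make the comparison of distributions concrete, here is a simplified sketch of KL-based calibration. It is not the procedure of any particular library: the helper names (`histogram`, `kl_divergence`, `quantize_dequantize`, `calibrate_threshold`), the symmetric clipping range, the bin count, and the synthetic data are all assumptions made for illustration. It picks the clipping threshold whose quantized values give a histogram closest, in KL divergence, to the histogram of the original values.

```python
import math
import random


def histogram(values, lo, hi, bins):
    """Count how many values fall into each of `bins` equal-width buckets on [lo, hi]."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        index = min(int((v - lo) / width), bins - 1)  # v == hi goes in the last bucket
        counts[index] += 1
    return counts


def kl_divergence(p_counts, q_counts, smoothing=1e-6):
    """KL(P || Q) between two histograms, smoothed so empty buckets do not divide by zero."""
    p_total = sum(p_counts) + smoothing * len(p_counts)
    q_total = sum(q_counts) + smoothing * len(q_counts)
    total = 0.0
    for p, q in zip(p_counts, q_counts):
        p_prob = (p + smoothing) / p_total
        q_prob = (q + smoothing) / q_total
        total += p_prob * math.log(p_prob / q_prob)
    return total


def quantize_dequantize(values, threshold, levels=127):
    """Clip to [-threshold, threshold], snap to `levels` steps per side, map back to floats."""
    scale = threshold / levels
    return [round(max(-threshold, min(threshold, v)) / scale) * scale for v in values]


def calibrate_threshold(values, candidates, bins=128):
    """Return the candidate threshold with the smallest KL divergence to the original data."""
    hi = max(abs(v) for v in values)
    reference = histogram(values, -hi, hi, bins)

    def divergence(threshold):
        quantized = quantize_dequantize(values, threshold)
        return kl_divergence(reference, histogram(quantized, -hi, hi, bins))

    return min(candidates, key=divergence)


# Synthetic activations (an assumption): mostly Gaussian, plus one large outlier.
random.seed(0)
values = [random.gauss(0.0, 1.0) for _ in range(5000)] + [8.0]
max_abs = max(abs(v) for v in values)
candidates = [max_abs * fraction for fraction in (0.25, 0.5, 0.75, 1.0)]
best = calibrate_threshold(values, candidates)
print("chosen clipping threshold:", best)
```

Production calibrators usually work on the histogram directly rather than re-quantizing every sample, but the selection criterion is the same: keep the range that distorts the distribution least.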

fp32 to fp16

Does my operation support `fp16`?

- CPUs do support saving `fp16` weights, but computations are done in `fp32`

Is my operation sensitive to `fp16`?

For example, epsilon in `LayerNormalization` is usually very small, but the smallest positive normal value in `fp16` is about $6 \times 10^{-5}$, so a much smaller epsilon can underflow to zero and cause NaN issues.
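The underflow can be checked with the standard library alone. This is a minimal sketch: the epsilon value `1e-12` is an assumption picked for illustration, and `to_fp16` is a hypothetical helper that round-trips a Python float through the IEEE 754 half-precision format via `struct`.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


eps = 1e-12                         # assumed LayerNorm epsilon, for illustration only
eps_fp16 = to_fp16(eps)             # too small for fp16, so it rounds to zero
smallest_normal_fp16 = 2.0 ** -14   # about 6.1e-5

# A constant input has zero variance, so the denominator relies entirely on epsilon.
variance = 0.0
denominator_full = (variance + eps) ** 0.5                 # small but nonzero
denominator_fp16 = to_fp16((variance + eps_fp16) ** 0.5)   # zero, so the division is 0/0

print("epsilon in fp16:", eps_fp16)
print("smallest normal fp16 value:", smallest_normal_fp16)
print("fp16 denominator:", denominator_fp16)
```

With a zero denominator, normalizing the constant input becomes a 0/0 division, which is where the NaN comes from in floating-point arithmetic.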

fp32 to int8

Consider a float $x$ in $[a, b]$, such that the affine quantization scheme is:

$$
x = S \cdot (x_q - Z)
$$

where:

- $x_q$ is the quantized `int8` value associated with $x$
- $S$ and $Z$ are the scaling and zero-point parameters
  - $S$ is the scale, a positive `float32`
  - $Z$ is the zero-point, or the `int8` value corresponding to the value `0` in `fp32`

Thus the quantized value is:

$$
x_q = \text{round}\left(\frac{x}{S} + Z\right)
$$

And any `fp32` value outside of $[a, b]$ is clipped to the closest representable value.
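As a worked example of the affine scheme with a scale $S$ and a zero-point $Z$, here is a minimal sketch in plain Python. The function names, the signed `int8` range of $[-128, 127]$, and the way $S$ and $Z$ are derived from $[a, b]$ are assumptions chosen for illustration, not taken from a specific library.

```python
def quantization_params(a, b, qmin=-128, qmax=127):
    """Derive scale S and zero-point Z so that [a, b] maps onto [qmin, qmax]."""
    scale = (b - a) / (qmax - qmin)
    zero_point = round(qmin - a / scale)
    zero_point = max(qmin, min(qmax, zero_point))  # keep Z itself representable
    return scale, zero_point


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """x_q = round(x / S + Z), clipped to the integer range."""
    # Note: Python's round() rounds exact halves to the nearest even integer.
    x_q = round(x / scale + zero_point)
    return max(qmin, min(qmax, x_q))


def dequantize(x_q, scale, zero_point):
    """x = S * (x_q - Z)."""
    return scale * (x_q - zero_point)


scale, zero_point = quantization_params(a=-1.0, b=3.0)
for x in (-1.0, 0.0, 1.234, 3.0, 10.0):  # 10.0 lies outside [a, b] and gets clipped
    x_q = quantize(x, scale, zero_point)
    print(x, "->", x_q, "->", dequantize(x_q, scale, zero_point))
```

Rounding the zero-point to an integer means that `0.0` is represented exactly, which matters for operations such as zero padding.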

See also: paper

quantization time

- Post-training dynamic quantization: the range of each activation is computed on the fly at runtime
- Post-training static quantization: the range of each activation is computed offline, before runtime
  - Observers are put on activations to collect their values
  - A certain number of forward passes are run on a calibration dataset
  - The range of each computation is computed according to some calibration technique
- Quantization-aware training: the range of each activation is computed during training
  - `fake_quantize` operations are inserted in the computation graph
  - `fake_quantize` is a no-op during inference, but during training it simulates the effect of quantization
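The observer and fake-quantize ideas can be sketched in a few lines of plain Python. This is an illustration under stated assumptions rather than a real framework API: `MinMaxObserver` and `fake_quantize` are hypothetical names, the calibration technique is a simple running min/max, the calibration batches are made up, and the observed range is assumed to be non-empty.

```python
class MinMaxObserver:
    """Records the running min and max of the activations it sees."""

    def __init__(self):
        self.min_val = float("inf")
        self.max_val = float("-inf")

    def observe(self, activations):
        self.min_val = min(self.min_val, min(activations))
        self.max_val = max(self.max_val, max(activations))

    def quantization_params(self, qmin=-128, qmax=127):
        # Widen the range to include 0.0 so that zero stays exactly representable.
        lo = min(self.min_val, 0.0)
        hi = max(self.max_val, 0.0)
        scale = (hi - lo) / (qmax - qmin)
        zero_point = round(qmin - lo / scale)
        return scale, zero_point


def fake_quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Quantize then immediately dequantize, so the output is a float carrying the rounding error."""
    x_q = max(qmin, min(qmax, round(x / scale + zero_point)))
    return scale * (x_q - zero_point)


# Static calibration: run a few batches through the observer before inference.
observer = MinMaxObserver()
calibration_batches = [[0.1, -0.4, 2.0], [1.5, -1.0, 0.3]]  # made-up activations
for batch in calibration_batches:
    observer.observe(batch)

scale, zero_point = observer.quantization_params()
print("observed range:", observer.min_val, observer.max_val)
print("scale:", scale, "zero-point:", zero_point)
print("fake-quantized 0.3:", fake_quantize(0.3, scale, zero_point))
```

In dynamic quantization the same range computation would run on each input at runtime instead of on a calibration set, and in quantization-aware training a `fake_quantize`-style step would sit in the forward pass so the model sees the rounding error while it trains.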

Methods and libraries

bitsandbytes and GPTQ